Comparing Information Retrieval Evaluation Measures

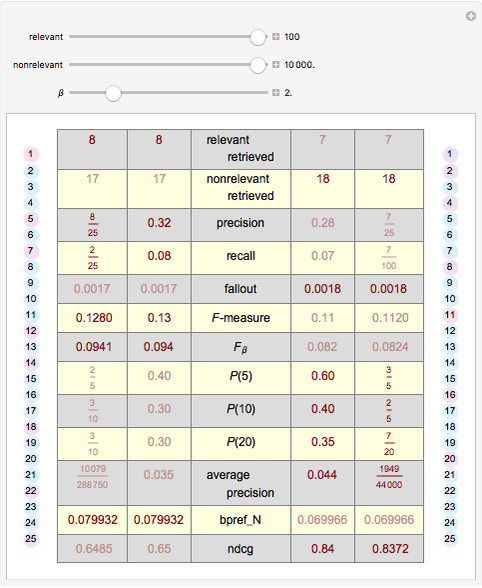

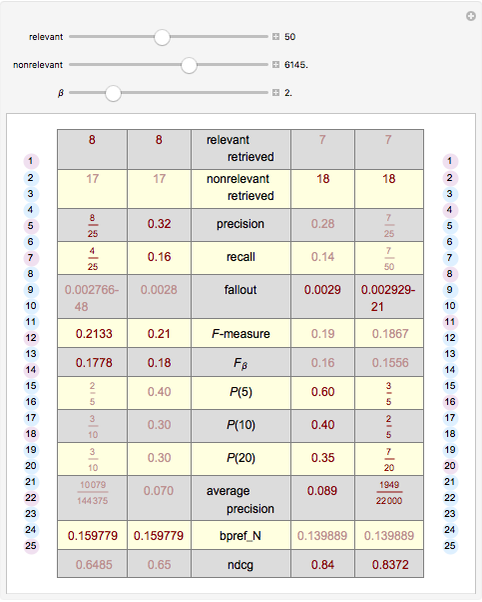

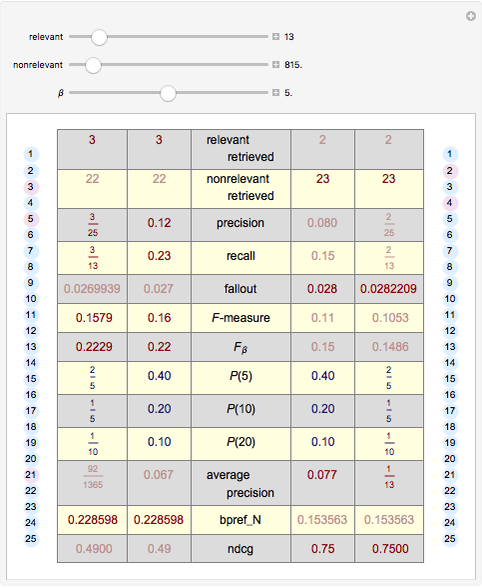

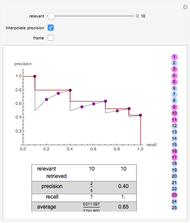

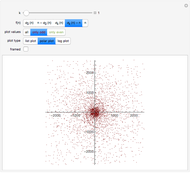

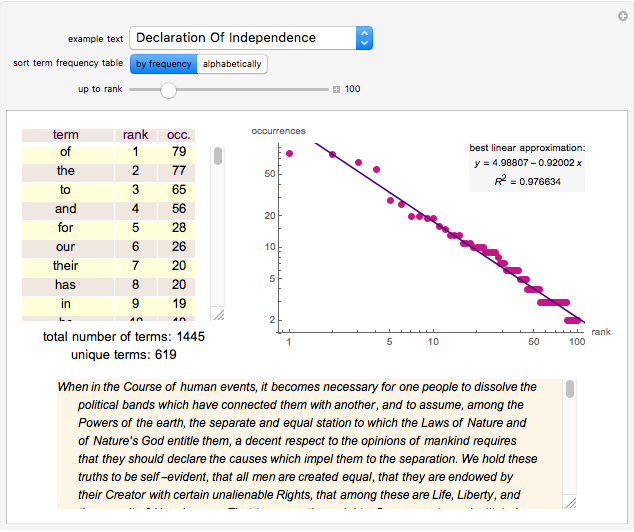

Compare several common information retrieval evaluation measures on the results that two systems return for the same query. Both systems are assumed to retrieve the same number of results for that query, so this number can be treated as a cutoff value.
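As a rough illustration of this kind of comparison, the following Python sketch scores two ranked result lists of equal length. The original does not say which measures are compared, so the choice here (precision and recall at the cutoff, average precision, reciprocal rank) is an assumption, as are the function names, the binary relevance judgments, and the total number of relevant documents.

```python
from typing import Dict, List


def precision_at_cutoff(rels: List[int]) -> float:
    """Fraction of the retrieved results that are relevant."""
    return sum(rels) / len(rels) if rels else 0.0


def recall_at_cutoff(rels: List[int], total_relevant: int) -> float:
    """Fraction of all relevant documents that were retrieved."""
    return sum(rels) / total_relevant if total_relevant else 0.0


def average_precision(rels: List[int], total_relevant: int) -> float:
    """Sum of precision values at each relevant rank, divided by the
    total number of relevant documents for the query."""
    hits = 0
    score = 0.0
    for rank, rel in enumerate(rels, start=1):
        if rel:
            hits += 1
            score += hits / rank
    return score / total_relevant if total_relevant else 0.0


def reciprocal_rank(rels: List[int]) -> float:
    """1 / rank of the first relevant result, or 0 if there is none."""
    for rank, rel in enumerate(rels, start=1):
        if rel:
            return 1.0 / rank
    return 0.0


def evaluate(rels: List[int], total_relevant: int) -> Dict[str, float]:
    """Collect all measures for one system's ranked list."""
    return {
        "precision": precision_at_cutoff(rels),
        "recall": recall_at_cutoff(rels, total_relevant),
        "average_precision": average_precision(rels, total_relevant),
        "reciprocal_rank": reciprocal_rank(rels),
    }


# Hypothetical judgments: 1 = relevant, 0 = not relevant, in rank order.
# Both lists have the same length, which plays the role of the cutoff.
system_a = [1, 1, 0, 0, 0]
system_b = [0, 0, 0, 1, 1]
total_relevant = 4  # assumed number of relevant documents in the collection

results_a = evaluate(system_a, total_relevant)
results_b = evaluate(system_b, total_relevant)

for name in results_a:
    print(f"{name:18s} A={results_a[name]:.4f}  B={results_b[name]:.4f}")
```

In this made-up example both systems retrieve two relevant documents, so the set-based measures (precision and recall) cannot tell them apart, while the rank-sensitive measures (average precision and reciprocal rank) favor the system that places relevant results earlier.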

Contributed by: Giovanna Roda (March 2011)

Open content licensed under CC BY-NC-SA

Details

References:

Wikipedia, "Information Retrieval".

E. M. Voorhees, "The Philosophy of Information Retrieval Evaluation," in: Evaluation of Cross-Language Information Retrieval Systems, Lecture Notes in Computer Science 2001, pp. 143–170.

T. Sakai, "Alternatives to Bpref," SIGIR '07 Proceedings, ACM, 2007, pp. 71–78.

Permanent Citation

"Comparing Information Retrieval Evaluation Measures"

http://demonstrations.wolfram.com/ComparingInformationRetrievalEvaluationMeasures/

Wolfram Demonstrations Project

Published: March 7 2011