Solving the Hole in the Square Problem with a Neural Network


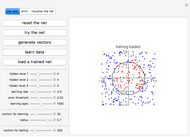

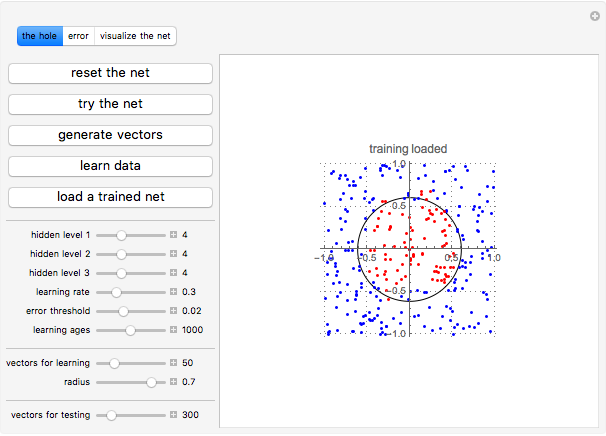

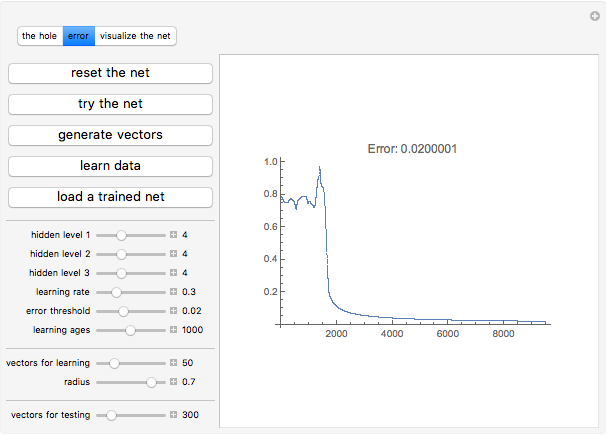

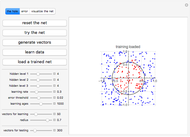

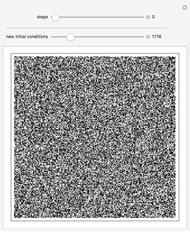

This Demonstration shows a neural network trained with error backpropagation. The net is tested on the "hole in a square" problem: given the coordinates of a random point, the neural network must determine whether the point lies in the hole.

Contributed by: Luca Zammataro (May 2010)

Open content licensed under CC BY-NC-SA


Details

Background

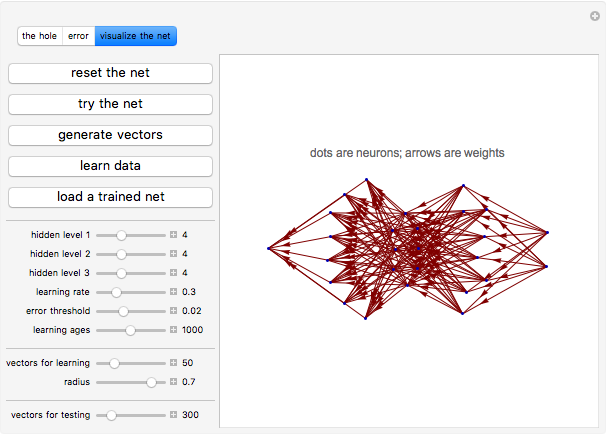

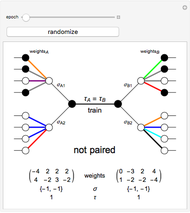

The neural network consists of two input neurons, one output neuron, and three hidden layers of eight units each. Learning is based on error backpropagation, a method for teaching a neural network how to perform a given task. It was first described by Arthur E. Bryson and Yu-Chi Ho in 1969 [7]. As Russell and Norvig put it: "The most popular method for learning in multilayer networks is called Back-propagation. It was first invented in 1969 by Bryson and Ho, but was more or less ignored until the mid-1980s." [6]
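The layered structure can be illustrated with a plain forward pass through a 2-8-8-8-1 network of sigmoid neurons. This is a minimal Python sketch, not the Demonstration's Mathematica code: the function names, the weight range [-0.5, 0.5] and the absence of bias terms are assumptions.

```python
import math
import random

def sigmoid(h):
    """Logistic sigmoid g(h) = 1 / (1 + e^(-h))."""
    return 1.0 / (1.0 + math.exp(-h))

def init_weights(layer_sizes, seed=None):
    """One weight matrix per pair of adjacent layers; row j feeds receiving neuron j.

    Weights are drawn uniformly from [-0.5, 0.5] (an assumed range); no bias terms are used.
    """
    rng = random.Random(seed)
    return [
        [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    ]

def forward(weights, x):
    """Propagate input x layer by layer; return the activations of every layer."""
    activations = [list(x)]
    for layer in weights:
        prev = activations[-1]
        # Each neuron outputs g(h), h being the weighted sum of the previous layer's outputs.
        activations.append([sigmoid(sum(w * a for w, a in zip(row, prev))) for row in layer])
    return activations

# Two inputs, three hidden layers of eight units each, one output.
LAYERS = [2, 8, 8, 8, 1]
W = init_weights(LAYERS, seed=0)
acts = forward(W, [0.3, 0.7])
print(acts[-1])  # a single output value between 0 and 1
```

Changing `LAYERS` changes the architecture in the same way as the three hidden-layer controls of the Demonstration.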

In short, a neural network can learn from a dataset that we call a "training sample"; in this sense, artificial neural networks were inspired by biological neural networks and their intrinsic ability to learn from experience. The backpropagation algorithm compares the network's output with the desired output from the training set and computes the error at each output neuron. It then propagates this error back through the network and adjusts the weights of the connections between neurons, taking into account each neuron's "activation function", loosely as in biological neurons.
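The compare-and-adjust cycle can be sketched as online backpropagation: the output error is computed, propagated back layer by layer, and each weight is moved by gradient descent. This Python version is an illustration under assumptions (sigmoid units, no bias terms, squared error, one update per pattern, initial weights in [-0.5, 0.5]); the name `backpropagate` only loosely echoes the program's function of that name and is not its actual code.

```python
import math
import random

def sigmoid(h):
    """Logistic activation g(h) = 1 / (1 + e^(-h))."""
    return 1.0 / (1.0 + math.exp(-h))

def init_weights(layer_sizes, rng):
    """Random weights in [-0.5, 0.5]; row j of each matrix feeds receiving neuron j (no biases)."""
    return [[[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
            for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

def forward(weights, x):
    """Return the activations of every layer, starting with the input vector."""
    acts = [list(x)]
    for layer in weights:
        acts.append([sigmoid(sum(w * a for w, a in zip(row, acts[-1]))) for row in layer])
    return acts

def backpropagate(weights, x, t, eps):
    """One online backpropagation step for pattern (x, t); returns the squared error before it."""
    acts = forward(weights, x)
    y = acts[-1]
    # Error term of each output neuron: (t_j - y_j) * g'(h_j), with g'(h) = y * (1 - y).
    deltas = [(tj - yj) * yj * (1.0 - yj) for tj, yj in zip(t, y)]
    for k in range(len(weights) - 1, -1, -1):
        layer, inputs = weights[k], acts[k]
        # Propagate the error terms one layer back, using the weights before they change.
        if k > 0:
            prev = [inputs[i] * (1.0 - inputs[i])
                    * sum(deltas[j] * layer[j][i] for j in range(len(layer)))
                    for i in range(len(inputs))]
        else:
            prev = []  # the input layer needs no error term
        # Gradient descent update: w_ji += eps * delta_j * x_i.
        for j, row in enumerate(layer):
            for i in range(len(row)):
                row[i] += eps * deltas[j] * inputs[i]
        deltas = prev
    return sum((tj - yj) ** 2 for tj, yj in zip(t, y))

def train_epoch(weights, data, eps):
    """Present every (x, target) pattern once; return the mean squared error."""
    return sum(backpropagate(weights, x, [t], eps) for x, t in data) / len(data)

rng = random.Random(0)
W = init_weights([2, 8, 8, 8, 1], rng)
data = [((0.5, 0.5), 0.0)]  # one illustrative pattern: the centre of the square, target 0
first = train_epoch(W, data, eps=0.5)
for _ in range(300):
    last = train_epoch(W, data, eps=0.5)
print(first, last)  # the error should shrink as the weights adapt
```

The error terms of a hidden layer are computed before that layer's incoming weights are updated, so every layer sees the same forward pass.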

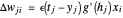

During learning, the weights between neurons are adjusted according to the so-called "delta rule", a gradient descent learning rule:

$$\Delta w_{ji} = \varepsilon \, (t_j - y_j) \, g'(h_j) \, x_i .$$

Here $\varepsilon$ is the epsilon constant (the learning rate), $g$ is the neuron's activation function, $t_j$ is the target output, $h_j$ is the weighted sum of the neuron's inputs, $y_j$ is the actual output, and $x_i$ is the $i$th input. The activation function is the sigmoid $g(h) = 1/(1 + e^{-h})$, whose derivative is $g'(h) = g(h)\,(1 - g(h))$, where

$$h_j = \sum_{i=1}^{n} x_i \, w_{ji}$$

is the weighted sum, computed by neuron $j$, of all $n$ input values, each multiplied by its respective weight $w_{ji}$.
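The update formula translates directly into code for a single sigmoid neuron. In this Python sketch the name `delta_rule_step` and the numbers are illustrative only; `eps` is the learning rate (epsilon).

```python
import math

def sigmoid(h):
    return 1.0 / (1.0 + math.exp(-h))

def delta_rule_step(w, x, t, eps):
    """Apply one delta-rule update to the weights w_ji of a single sigmoid neuron j.

    x holds the inputs x_i, t is the target output t_j and eps is the learning rate.
    """
    h = sum(wi * xi for wi, xi in zip(w, x))  # h_j = sum_i x_i w_ji
    y = sigmoid(h)                             # actual output y_j = g(h_j)
    g_prime = y * (1.0 - y)                    # g'(h_j) = g(h_j) (1 - g(h_j))
    return [wi + eps * (t - y) * g_prime * xi for wi, xi in zip(w, x)]

w = [0.1, -0.2]
x = [1.0, 0.5]
y_before = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
for _ in range(100):
    w = delta_rule_step(w, x, t=1.0, eps=0.5)
y_after = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
print(y_before, y_after)  # the output moves from 0.5 toward the target 1.0
```

Because the target exceeds the output at every step, each update raises $h_j$, so the output climbs monotonically toward 1 without reaching it.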

The Hole in a Square Problem

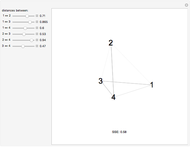

The question is: given the coordinates of a point in the square, can the neural network recognize whether the point lies inside the circular hole?
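For concreteness, the rule the net has to learn can be written as a distance test. This sketch assumes the square is the unit square [0, 1] × [0, 1] with the hole centred at (0.5, 0.5); the text does not state the coordinates, so the centre and the function names `in_hole` and `target` are hypothetical. The targets follow the training-set encoding described in the Learning section (0 inside the hole, 1 outside).

```python
def in_hole(x1, x2, r, cx=0.5, cy=0.5):
    """True if (x1, x2) lies strictly inside the circle of radius r centred at (cx, cy).

    The unit square and the centre (0.5, 0.5) are assumptions for illustration.
    """
    return (x1 - cx) ** 2 + (x2 - cy) ** 2 < r ** 2

def target(x1, x2, r):
    """Expected network output: 0 inside the hole (red), 1 outside (blue)."""
    return 0.0 if in_hole(x1, x2, r) else 1.0

print(target(0.5, 0.5, 0.3), target(0.05, 0.95, 0.3))  # 0.0 1.0
```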

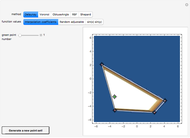

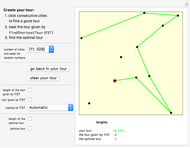

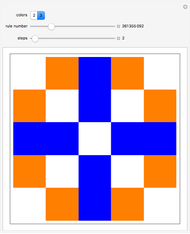

This Demonstration shows how the neural network classifies random points after being trained on a dataset; when the net "thinks" that a random vector lies inside the hole, it displays that vector in red.


Trying the Net

Clicking the "reset the net" button resets the net: it randomizes all the network weights (the connections among neurons) and sets all neurons to 0. After a reset, clicking the "try the net" button produces a series of random vectors for testing the net. We use these vectors to check whether the net has learned the rule that decides if a point lies inside the hole. Together, these vectors form the "testing vector set" of the neural network.

Indeed, if we initialize the net and click the "try the net" button, none of the points is recognized. You can see this on the square: all dots are blue, because the untrained network cannot "guess" any relationship between the vectors and their expected outputs.

In short, an untrained net cannot recognize points within the circle (by convention, points inside the circle are red and points outside are blue) when it loads the vectors into its input neurons.

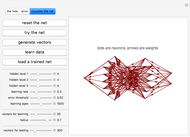

At this point, if you click the "load a trained net" button, the net is loaded with weights that were adjusted by the backpropagate function. (These weights were obtained from an earlier training run when this Demonstration was written.) In the program, these weights are stored in the arrays W1, W2, W3, and W4: W1 holds the weights between the input neurons (X) and the first hidden layer (HID1); W2 the weights between HID1 and HID2; W3 the weights between HID2 and HID3; and W4 the weights between HID3 and the output neuron (Y).

After loading the trained weights, click the "try the net" button and observe that the vectors inside the circle become red. This is because what the net learned is stored in its weights, which have now been loaded.
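For the default architecture, the four arrays have the shapes printed below. This sketch assumes that rows index the receiving neurons and that there are no bias terms; `weight_shapes` and `load_trained_net` are hypothetical helpers, and loading a trained net is modelled simply as copying saved W1 to W4 after a shape check.

```python
# Layer names from the text; rows of each matrix index the receiving neurons (an assumed layout).
LAYERS = {"X": 2, "HID1": 8, "HID2": 8, "HID3": 8, "Y": 1}

def weight_shapes(layers):
    """Map W1..W4 to (rows, cols) = (size of receiving layer, size of sending layer)."""
    names = list(layers)
    return {f"W{k + 1}": (layers[dst], layers[src])
            for k, (src, dst) in enumerate(zip(names, names[1:]))}

def load_trained_net(trained, layers):
    """Hypothetical "load a trained net": copy saved W1..W4 after checking their shapes."""
    loaded = {}
    for name, (rows, cols) in weight_shapes(layers).items():
        matrix = trained[name]
        if len(matrix) != rows or any(len(row) != cols for row in matrix):
            raise ValueError(f"{name} must be {rows} x {cols}")
        loaded[name] = [list(row) for row in matrix]
    return loaded

for name, (rows, cols) in weight_shapes(LAYERS).items():
    print(name, f"{rows} x {cols}")
# prints: W1 8 x 2 / W2 8 x 8 / W3 8 x 8 / W4 1 x 8 (one per line)
```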

You can increase or decrease the number of random test points with the "vectors for testing" control, then click "try the net" again to see the results.

You can erase what the net has learned by resetting it with the "reset the net" button. If you then click "try the net", the output is again random.
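Turning the network's output into red and blue dots requires a decision threshold. This sketch assumes a threshold of 0.5 (the text does not state one), which fits the training encoding of 0 for inside and 1 for outside, and a hole of radius r centred at (0.5, 0.5) in the unit square. `classify`, `accuracy` and the stand-in `oracle` are hypothetical; a constant output of 0.9 mimics the all-blue behavior of the untrained net described above.

```python
import random

def classify(predict, points, threshold=0.5):
    """Color each point: red when the net's output is below the threshold, blue otherwise."""
    return ["red" if predict(p) < threshold else "blue" for p in points]

def accuracy(colors, points, r, cx=0.5, cy=0.5):
    """Fraction of points whose color matches true hole membership (assumed geometry)."""
    truth = ["red" if (x - cx) ** 2 + (y - cy) ** 2 < r ** 2 else "blue" for x, y in points]
    return sum(c == t for c, t in zip(colors, truth)) / len(points)

rng = random.Random(3)
points = [(rng.random(), rng.random()) for _ in range(200)]

# A hypothetical stand-in for a trained net: 0.1 inside the hole, 0.9 outside.
def oracle(p):
    return 0.1 if (p[0] - 0.5) ** 2 + (p[1] - 0.5) ** 2 < 0.3 ** 2 else 0.9

colors = classify(oracle, points)
print(accuracy(colors, points, r=0.3))  # 1.0 for the perfect stand-in

untrained = classify(lambda p: 0.9, points)  # every dot comes out blue
```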

Learning

You can train the net with a series of sample patterns. This set of patterns is called a training set: it consists of a series of input vectors (point coordinates) and their expected outputs, red if the point is inside the circle and blue if it is outside. In the training set, red is encoded as output 0 and blue as output 1.
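A training set in this encoding can be generated as shown here. The sketch assumes the unit square with the hole centred at (0.5, 0.5); `generate_hole` is a hypothetical Python stand-in for the program's HOLE array, not its actual code.

```python
import random

def generate_hole(n, r, seed=None):
    """Hypothetical counterpart of the HOLE array: n random points with their targets.

    Assumes the unit square with a hole of radius r centred at (0.5, 0.5);
    the target is 0 (red) inside the hole and 1 (blue) outside.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x1, x2 = rng.random(), rng.random()
        inside = (x1 - 0.5) ** 2 + (x2 - 0.5) ** 2 < r ** 2
        data.append(((x1, x2), 0.0 if inside else 1.0))
    return data

HOLE = generate_hole(500, r=0.3, seed=42)
print(sum(1 for _, t in HOLE if t == 0.0), "of", len(HOLE), "points fall in the hole")
```

With r = 0.3 the hole covers about 28% of the unit square (π · 0.3² ≈ 0.28), so the classes are unbalanced; larger radii give more red examples.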

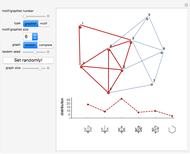

1. Click the "reset the net" button. This is important; it initializes all the neurons and weights.

2. Generate a random training set by clicking the "generate vectors" button. The "vectors for learning" and "radius" controls let you choose the size of the learning dataset and the radius of the hole. Clicking "generate vectors" produces a random set of input vectors with their expected outputs, and a map of colored points is shown. (In the Mathematica program, the array of generated random patterns and outputs is called HOLE.)

4. Using the "learning ages" control, set the number of learning steps to around 20.

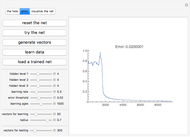

5. Finally, click the "learn data" button. A plot of the error descent appears on Cartesian axes. During learning, the net loads each vector into its input neurons and the corresponding expected output into its output neuron. The "learn data" button runs the backpropagate function, which, step by step, compares the net's guess with the output stored in the training dataset and adjusts each weight accordingly.

Each click of the "learn data" button trains the net for only 20 steps, so you must click many times to reach the accepted error threshold of 0.02. This limitation comes from the Manipulate function, which does not permit long calculations.

You can avoid this inconvenience by copying the program into Mathematica and evaluating Learn2[HOLE, 1000000]. Learn2 displays the time steps and the error descent as it runs. The first argument is the name of the dataset; the second is the number of training ages. Remember to initialize the net first by clicking the "reset the net" button; then you can start learning.

6. When learning is finished (generally when the error has descended to around 0.02), switch on the visualization by clicking "the hole" button (the default visualization), then try the net by clicking the "try the net" button: the net should recognize points within the hole and color them red. Try the net with different testing vector sets by clicking the relevant button.
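The stopping rule in the steps above (train age after age until the error falls below about 0.02) and the long-running Learn2[HOLE, 1000000] call can be sketched as a simple loop. This Python sketch is not the program's Learn2: `learn` and the geometric stand-in `fake_epoch` are hypothetical, and in practice `train_epoch` would be one pass of backpropagation over the training set. Calling `learn` repeatedly with `max_ages=20` mimics clicking "learn data" several times.

```python
def learn(train_epoch, max_ages, threshold=0.02, report_every=1000):
    """Hypothetical Learn2-style loop.

    Calls train_epoch() once per age until the error drops below threshold
    or max_ages is reached; records (age, error) pairs for an error-descent plot.
    """
    history = []
    for age in range(1, max_ages + 1):
        err = train_epoch()
        history.append((age, err))
        if age % report_every == 0:
            print(f"age {age}: error {err:.4f}")
        if err < threshold:
            break
    return history

# Stand-in epoch function whose error decays geometrically (for demonstration only).
state = {"err": 0.25}

def fake_epoch():
    state["err"] *= 0.9
    return state["err"]

history = learn(fake_epoch, max_ages=1_000_000, threshold=0.02, report_every=10)
print("stopped after", history[-1][0], "ages")  # stops after 24 ages
```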

You can choose the architecture of your net by changing the number of hidden neurons with the three hidden-layer controls. Changing the architecture can affect learning. Click the "reset the net" and "try the net" buttons to create the new architecture.

To change the learning rate, use the "learning rate" control.

References:

[1] J. A. Freeman and D. M. Skapura, Neural Networks: Algorithms, Applications, and Programming Techniques, New York: Addison–Wesley, 1991.

[2] R. Rojas, Neural Networks: A Systematic Introduction, New York: Springer, 1996.

[3] Wikipedia, "Backpropagation."

[4] T. Mitchell, Machine Learning, McGraw Hill, 1997.

[5] B. Krose and P. van der Smagt, An Introduction to Neural Networks, Amsterdam: The University of Amsterdam.

[6] S. Russell and P. Norvig, Artificial Intelligence: A Modern Approach, Upper Saddle River, NJ: Prentice Hall, 2003, p. 578.

[7] A. E. Bryson and Y.-C. Ho, Applied Optimal Control: Optimization, Estimation, and Control, New York: Blaisdell, 1969, p. 481.
