The Multi-Arm Bandit

Requires a Wolfram Notebook System

Interact on desktop, mobile and cloud with the free Wolfram Player or other Wolfram Language products.

You are given two biased coins, but you do not know the bias of either coin. There is a reward each time you successfully get heads, with the amount of the reward decreasing with each coin flip. This Demonstration shows the outcomes of a series of flips, each made by selecting a coin with one of the two choice buttons. The outcomes determine the total reward and provide information on the biases of the two coins, which may guide future choices. A good coin-picking strategy accumulates the greatest reward by balancing the need to explore which coin is more likely to succeed with exploiting the coin that seems best so far.

Contributed by: Tad Hogg (December 2011)

Open content licensed under CC BY-NC-SA

Snapshots

Details

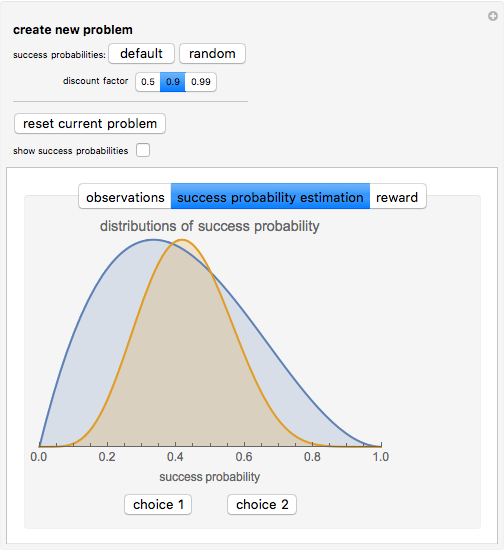

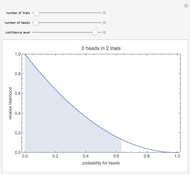

Snapshot 1: This shows posterior distributions of success probabilities. Choice 2, with 5 successes out of 12 trials, appears better than choice 1, with 1 success out of 3 trials. But choice 1 has a larger chance for high success probabilities so it may be worth exploring further.

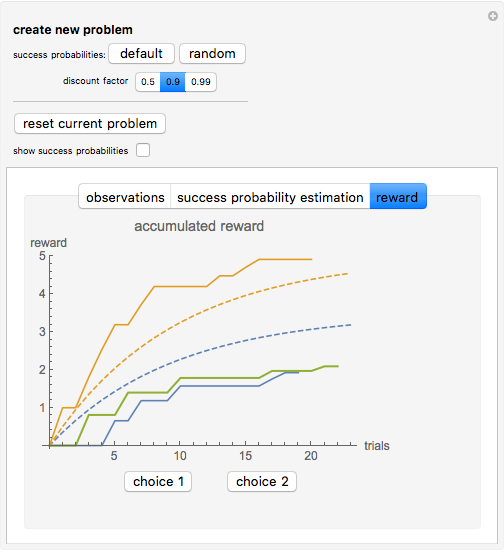

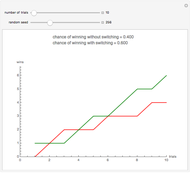

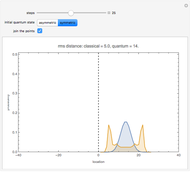

Snapshot 2: This shows accumulated rewards (solid curves) from three repetitions of a single problem. For comparison, the upper and lower dashed curves show the expected reward of the best and random choices, respectively.

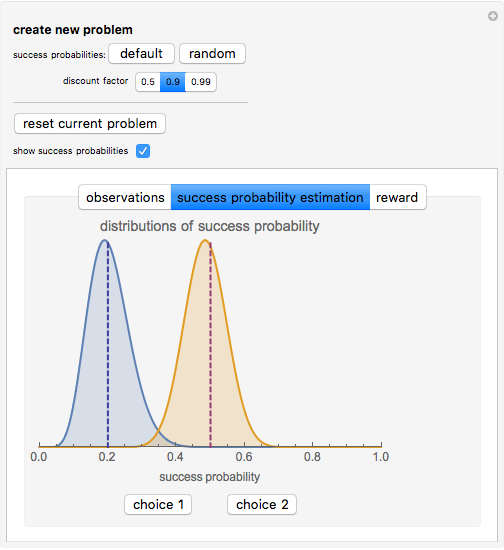

Snapshot 3: After many trials of each choice, the posterior distributions concentrate around the actual values, indicated by the vertical dashed lines. Using this many trials to reduce uncertainty of the success probabilities also reduces the expected reward due to the many trials with the worse coin.

The accumulated reward is  after

after  flips, where

flips, where  , between 0 and 1, is the reward discount factor and

, between 0 and 1, is the reward discount factor and  if the

if the  flip was heads and

flip was heads and  if it was tails. The largest possible reward occurs when every flip gives heads, with the accumulated reward approaching

if it was tails. The largest possible reward occurs when every flip gives heads, with the accumulated reward approaching  after many trials.

after many trials.

When  is small, success on the first few flips is important: there is not much future gain to be had from improved information. On the other hand, when

is small, success on the first few flips is important: there is not much future gain to be had from improved information. On the other hand, when  is near 1, devoting early trials to exploring which coin is better can pay off significantly during many later trials.

is near 1, devoting early trials to exploring which coin is better can pay off significantly during many later trials.

The "reset current problem" button allows starting over with the same two coins. To create a new problem, select either "default" or "random" values for the biases of the two coins and a "discount factor  ," using the top controls. Checking the "show success probabilities" box displays the actual bias for each coin, thereby removing the uncertainty in bias values that is a key aspect of bandit problems.

," using the top controls. Checking the "show success probabilities" box displays the actual bias for each coin, thereby removing the uncertainty in bias values that is a key aspect of bandit problems.

The "observations" tab in the Demonstration shows the total successes and failures for each choice and the accumulated reward.

The "success probability estimation" tab shows the posterior distribution of the actual success probabilities of the two coins, starting from a uniform prior distribution. The outcome from each flip of a coin updates the distribution for that coin according to Bayes' theorem.

The "reward" tab shows the accumulated reward for the trials with the current problem. It compares these actual rewards with expected reward (i.e., average over many repetitions) of making the best or random choices, with the upper and lower dashed curves, respectively.

If the biases of the coins were known, the best strategy would be to always pick the coin with the higher success probability. However, the person flipping the coins does not know the biases. Thus choices involve a trade-off between gaining information about the coins' biases (exploration) and gaining rewards as soon as possible, i.e., before the discount factor significantly reduces the reward from each success (exploitation).

Strategies emphasizing exploration include selecting coins randomly and picking the coin with the fewest trials so far. A simple exploitation strategy is "go with the winner": if the last outcome was a success, continue with that coin; otherwise, switch to the other coin. A more sophisticated exploitation strategy uses information from all prior flips: picking the coin with the higher estimated chance of success according to Bayes' theorem. This estimate is the mean of the posterior distribution, given by  when observing

when observing  successes out of

successes out of  flips for a coin.

flips for a coin.

These strategies are simple but do not maximize the expected accumulated reward over an unlimited number of trials. The optimal procedure is to compute the Gittins index of each coin, based on the observed number of successes and failures, and then pick the coin with the largest index [2]. This choice will often be the same as the choice with highest expected immediate reward, but not always, especially during the early steps where possible immediate loss from exploring gives a larger eventual gain, on average, from improved information about the coins.

References

[1] J. Cohen, S. McClure, and A. Yu, "Should I Stay or Should I Go? How the Human Brain Manages the Trade-off between Exploitation and Exploration," Philosophical Transactions of the Royal Society B, 362, 2007 pp. 933–942.

[2] J. Gittins, K. Glazebrook, and R. Weber, Multi-armed Bandit Allocation Indices, 2nd ed., Hoboken, NJ: Wiley, 2011.

Permanent Citation