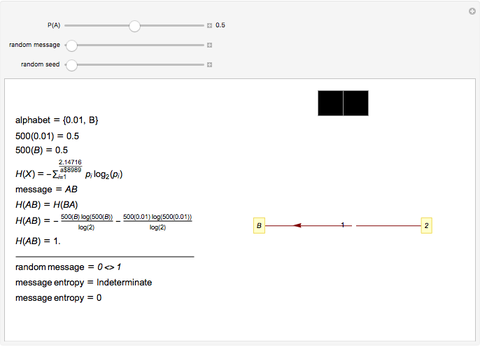

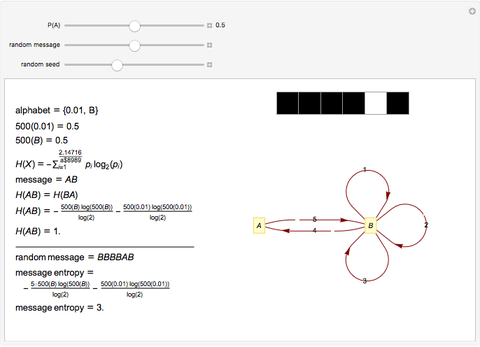

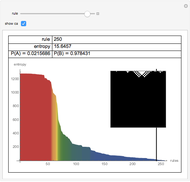

Entropy of a Message Using Random Variables


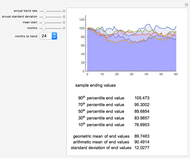

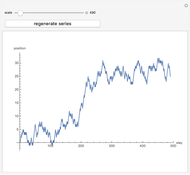

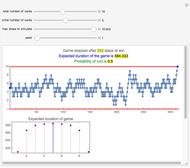

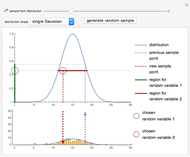

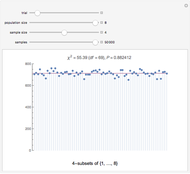

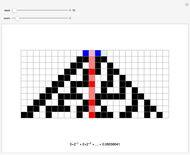

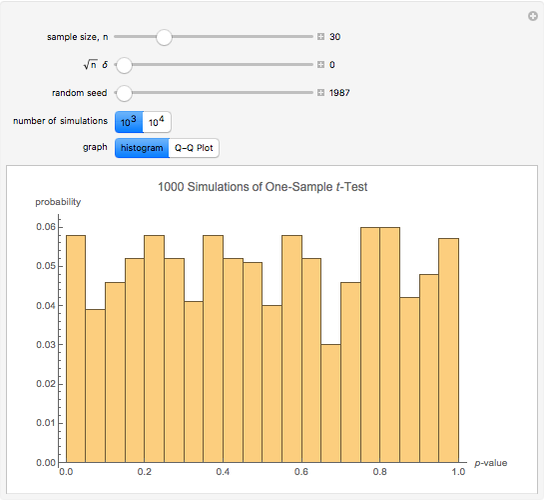

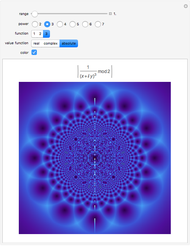

By analogy with the entropy that appears in the second law of thermodynamics, random variables can be used to calculate the information entropy (or Shannon entropy) of a message. This quantity measures the average amount of information carried by each symbol of the message. Here the message is built from two symbols, each of which occurs with its own probability.
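For a source whose symbols occur with probabilities $p_i$, the Shannon entropy is $H = -\sum_i p_i \log_2 p_i$ bits per symbol. For two symbols occurring with probabilities $p$ and $1-p$, this reduces to $H(p) = -p\log_2 p - (1-p)\log_2(1-p)$, which reaches its maximum of 1 bit at $p = 1/2$. The Python sketch below is not the Demonstration's own Wolfram Language code. It assumes base-2 logarithms (bits), and the function names `shannon_entropy`, `binary_entropy` and `message_entropy` are hypothetical. It computes the entropy from given probabilities and also estimates it from the relative symbol frequencies of an actual message.

```python
import math
from collections import Counter


def shannon_entropy(probabilities):
    """Shannon entropy in bits: H = -sum(p * log2(p)).

    Terms with p == 0 are skipped, following the convention 0 * log2(0) = 0.
    """
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def binary_entropy(p):
    """Entropy of a two-symbol source whose symbols occur with probabilities p and 1 - p."""
    return shannon_entropy([p, 1.0 - p])


def message_entropy(message):
    """Estimate the per-symbol entropy from the relative frequencies in a message.

    Assumption (hypothetical helper): each symbol's probability is approximated
    by its count divided by the message length.
    """
    counts = Counter(message)
    n = len(message)
    return shannon_entropy(c / n for c in counts.values())


if __name__ == "__main__":
    # Equally likely symbols give the maximum of 1 bit per symbol.
    print(binary_entropy(0.5))
    # A message where one symbol occurs 3 times out of 4 carries less information per symbol.
    print(message_entropy("0001"))
```

Skipping zero probabilities keeps `math.log2` from being called with 0, which would raise a `ValueError`. It also matches the limit $p \log_2 p \to 0$ as $p \to 0$, so a message made of a single repeated symbol has zero entropy.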

Contributed by: Daniel de Souza Carvalho (March 2011)

Open content licensed under CC BY-NC-SA

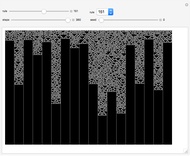

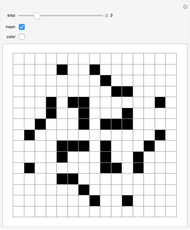

Snapshots

Details

C. E. Shannon and W. Weaver, The Mathematical Theory of Communication, Urbana, IL: University of Illinois Press, 1963.
