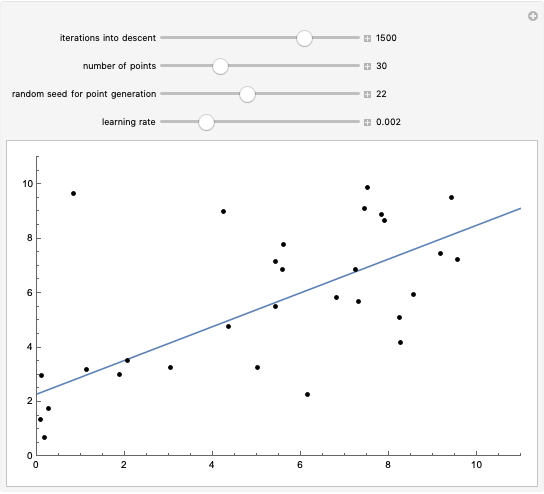

Linear Regression with Gradient Descent


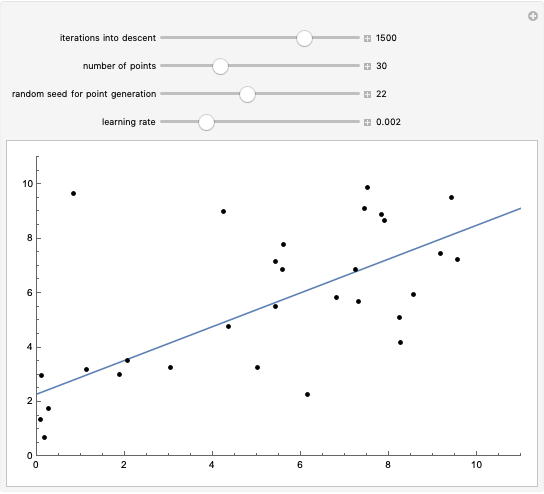

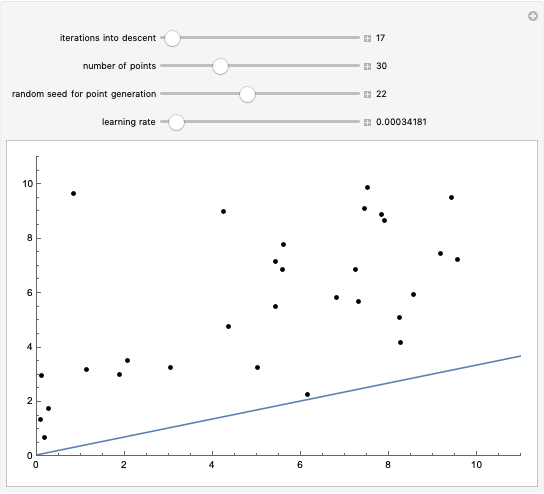

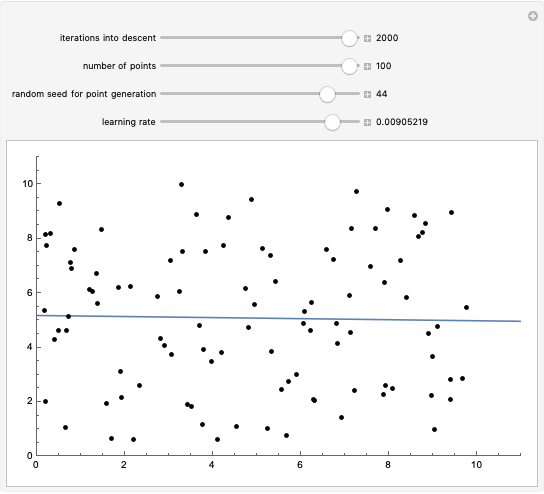

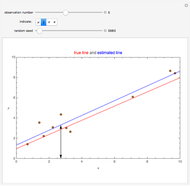

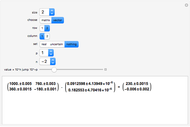

This Demonstration shows how linear regression can determine the best fit to a collection of points by iteratively applying gradient descent. Linear regression works by minimizing the error function:

, where

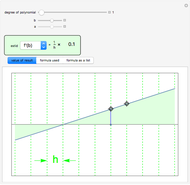

, where  is the number of points. Because it is not always possible to solve for the minimum of this function, gradient descent is used. Gradient descent consists of iteratively subtracting from a starting value
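Gradient descent needs the slope of the error function at the current point. Assuming $E$ is the mean squared error $E(m, b) = \frac{1}{N}\sum_{i=1}^{N}\bigl(y_i - (m x_i + b)\bigr)^2$, that slope is the gradient of $E$, obtained by differentiating each squared residual with the chain rule:

```latex
\frac{\partial E}{\partial m} = -\frac{2}{N}\sum_{i=1}^{N} x_i\bigl(y_i - (m x_i + b)\bigr),
\qquad
\frac{\partial E}{\partial b} = -\frac{2}{N}\sum_{i=1}^{N}\bigl(y_i - (m x_i + b)\bigr).
```

Both components are averages of the residuals $y_i - (m x_i + b)$, weighted by $x_i$ for the slope and unweighted for the intercept.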

is the number of points. Because it is not always possible to solve for the minimum of this function, gradient descent is used. Gradient descent consists of iteratively subtracting from a starting value  the slope at point

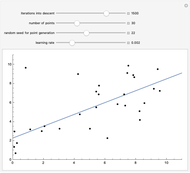

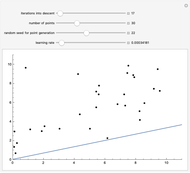

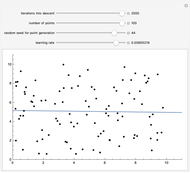

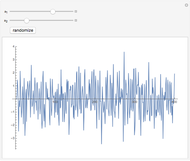

the slope at point  times a constant called the learning rate. You can vary the iterations into gradient descent, the number of points in the dataset, the seed for randomly generating the points and the learning rate.
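The iteration can be sketched in Python, assuming the mean-squared-error form of $E$ and a starting point of $(m, b) = (0, 0)$. This is an illustration, not the Demonstration's Wolfram Language code: the helper names (`make_points`, `mse`, `gradient`, `gradient_descent`), the underlying line $y = 2x - 1$, the x-range and the Gaussian noise used to generate the data are all hypothetical. The parameters `iterations`, `n_points`, `seed` and `learning_rate` mirror the Demonstration's controls.

```python
import random


def make_points(n_points, seed, true_m=2.0, true_b=-1.0, noise=0.5):
    """Generate noisy samples around y = true_m * x + true_b.

    The true line, x-range and noise level are hypothetical choices.
    """
    rng = random.Random(seed)  # seeded generator, so the dataset is reproducible
    xs = [rng.uniform(-5.0, 5.0) for _ in range(n_points)]
    ys = [true_m * x + true_b + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


def mse(m, b, xs, ys):
    """Mean squared error E(m, b) of the line y = m*x + b."""
    n = len(xs)
    return sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys)) / n


def gradient(m, b, xs, ys):
    """Partial derivatives (dE/dm, dE/db) of the mean squared error."""
    n = len(xs)
    residuals = [y - (m * x + b) for x, y in zip(xs, ys)]
    dm = -2.0 / n * sum(x * r for x, r in zip(xs, residuals))
    db = -2.0 / n * sum(residuals)
    return dm, db


def gradient_descent(xs, ys, learning_rate, iterations, m0=0.0, b0=0.0):
    """Step (m, b) against the gradient, scaled by the learning rate."""
    m, b = m0, b0
    for _ in range(iterations):
        dm, db = gradient(m, b, xs, ys)
        m -= learning_rate * dm
        b -= learning_rate * db
    return m, b


if __name__ == "__main__":
    xs, ys = make_points(n_points=30, seed=1)
    m, b = gradient_descent(xs, ys, learning_rate=0.02, iterations=500)
    print(f"m = {m:.3f}, b = {b:.3f}, E = {mse(m, b, xs, ys):.4f}")
```

With enough iterations and a small enough learning rate, the result approaches the ordinary least-squares line for the same points. If the learning rate is too large, each step overshoots and $E$ grows instead of shrinking; on this synthetic data, `learning_rate=0.3` diverges while `0.02` converges.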


Contributed by: Jonathan Kogan (April 2017)

Open content licensed under CC BY-NC-SA
