Logarithm Seen as the Size of a Number

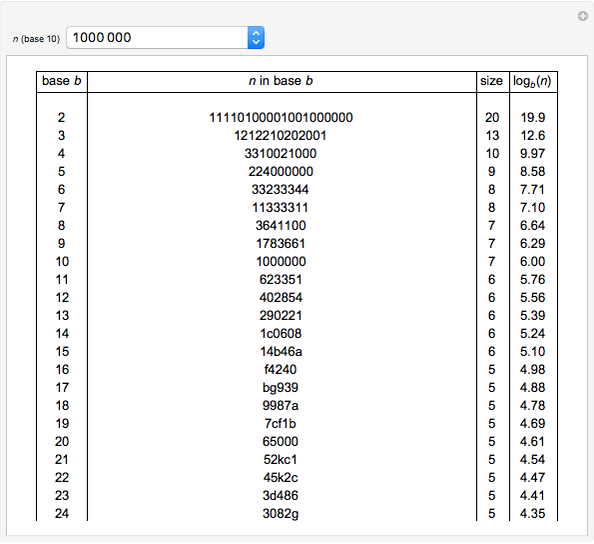

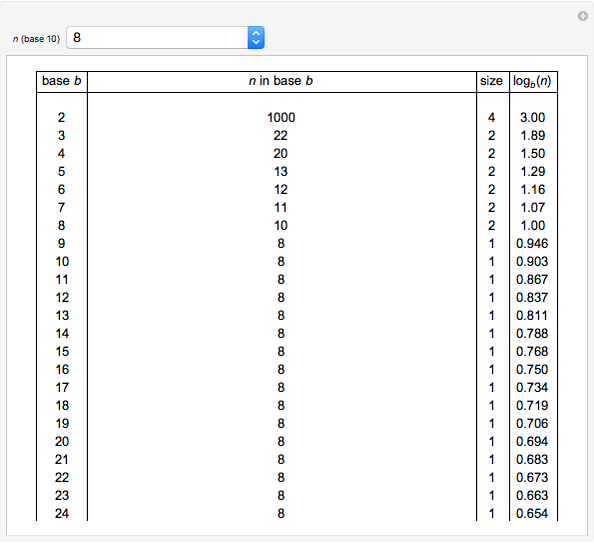

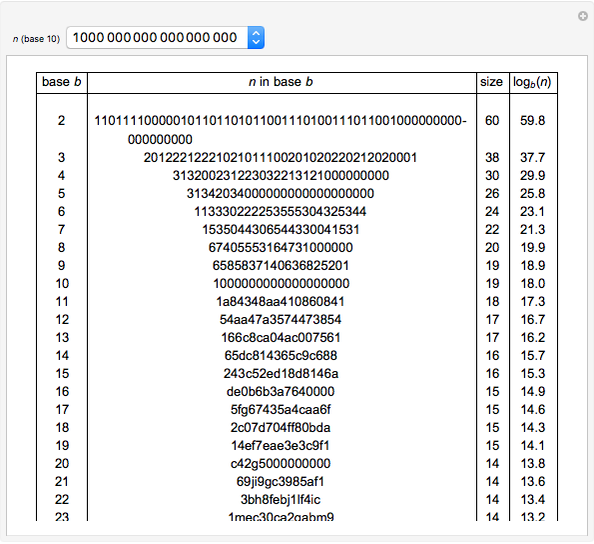

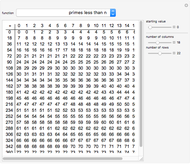

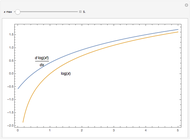

This Demonstration illustrates the fact that the logarithm of a number is its size.

Contributed by: Cedric Voisin (July 2012)

Open content licensed under CC BY-NC-SA

Details

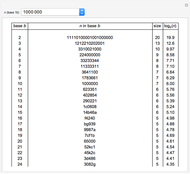

We write numbers in a base $b$ as a series of symbols (digits), each having $b$ possible states (values). Hence with $n$ symbols, you have $b^n$ possible combinations, so $\log_b x$ can be seen as the number of digits needed to write $x$.

The "log = size" equality holds only to within an error of 1, because the log is real-valued whereas the number of digits is an integer. For instance, $\log_{10} 100 = 2$ but 100 has 3 digits, and $\log_{10} 99 \approx 1.996$ but 99 has 2 digits.
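The gap between the log and the digit count can be checked numerically. The sketch below is only an illustration: the helper name `digit_count` is hypothetical and not part of the Demonstration. It counts digits by repeated integer division and compares the result with the base-10 logarithm, assuming positive integers and an integer base of at least 2.

```python
import math


def digit_count(x: int, base: int = 10) -> int:
    """Count the digits of a positive integer x written in the given base."""
    if x < 1 or base < 2:
        raise ValueError("x must be >= 1 and base must be >= 2")
    count = 0
    while x > 0:
        x //= base  # drop the last digit
        count += 1
    return count


# Compare the integer size (digit count) with the real-valued log.
for x in (9, 10, 99, 100, 12345):
    print(x, digit_count(x), round(math.log10(x), 3))
```

For a positive integer the digit count equals $\lfloor \log_b x \rfloor + 1$, so it exceeds the log by more than 0 and at most 1.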

Nonetheless, viewing the log merely as the size can be helpful to get some intuition about it and gives interesting insights in various fields where the log is central.

This may help beginners and students get a better feel for the log than its usual mathematical definitions and properties do (e.g., inverse of exponentiation, integral of $1/x$, tool to transform multiplication into addition or to deal with large numbers).

It is a pity that this function has been baptized with such a long and complicated name. Many students would have an easier life were it named "size" instead of "logarithm"!

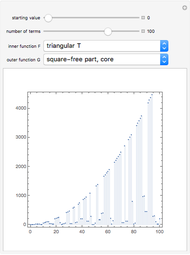

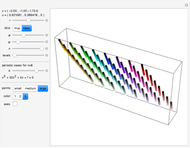

As an advanced example, in information theory, $b$ is the number of different possible states available to each symbol, and the log is the quantity of information contained in the sequence of symbols. Each digit is then called a bit (with $b = 2$) and is the unit of information.

If $p$ is the probability of a state, then $1/p$ is the number of states with probability $p$, and $\log_b(1/p)$ is the number of bits needed to write this number. Then the entropy $\sum p \log_b(1/p)$ is the average number of bits (average information) needed to write the number of available states. Nothing is known about the nature of the states, only their number (they can be the outcomes of a die roll or the microstates of a gas), so the more there are on average, the more knowledge we know we are missing; hence the usual interpretation of entropy as a measure of our ignorance (and that is why a six-sided die has a much smaller entropy than a gas).
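As a rough illustration of entropy as an average size, the sketch below computes $\sum p \log_b(1/p)$ for a fair coin and a fair six-sided die. The function name `entropy` and its `base` parameter are hypothetical choices for this example, and base 2 is assumed as the default so that the result is in bits.

```python
import math


def entropy(probs, base=2.0):
    """Average of log_base(1/p) over the states, weighted by p.

    States with p == 0 contribute nothing and are skipped.
    """
    return sum(p * math.log(1.0 / p, base) for p in probs if p > 0)


fair_coin = [0.5, 0.5]
fair_die = [1.0 / 6.0] * 6

print("coin:", entropy(fair_coin))  # one binary digit is enough
print("die: ", entropy(fair_die))   # log2(6), between 2 and 3 bits
```

For equiprobable states the average reduces to the log of the number of states, which is the "size" reading used in the text.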

A symbol with $b$ states can be a digit, but it can also be anything less abstract with a known number of states (coin, die, atom, molecule, degree of freedom, …). Hence, the entropy in statistical mechanics can be seen more physically as a number of energy containers ($b$ states each) present in the system. The physical meaning depends on the base you choose.

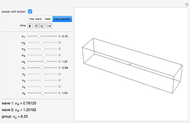

The base $b$ usually depends on the field of application: base 2 in information theory, base 10 in the everyday decimal system, and the natural base $e \approx 2.718$ in math and physics.
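Changing the base only rescales the size by a constant factor, since $\log_b x = \ln x / \ln b$. The sketch below, with a hypothetical helper named `size`, prints the real-valued size of the same number in the three bases mentioned above.

```python
import math


def size(x: float, base: float) -> float:
    """Real-valued 'size' of x: its logarithm in the given base."""
    return math.log(x) / math.log(base)


x = 1_000_000
for name, base in (("base 2", 2), ("base 10", 10), ("base e", math.e)):
    print(f"{name}: {size(x, base):.3f}")
```

The same number needs more symbols when each symbol has fewer states, and the ratio between two bases is itself a log: the size in base 2 is the size in base 10 multiplied by $\log_2 10$.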

In the latter case, though, you have to imagine a virtual symbol or container with about 2.718 states! Indeed, $e$ is the number of states you can get with an infinite collection of one-faced coins! The constant $e$ is a virtual way to deal naturally with a base (or a number of symbols, since $b^n = e^{n \ln b}$) varying continuously instead of discretely.
