Neural Cryptography


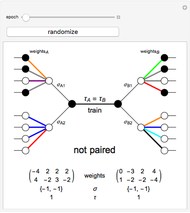

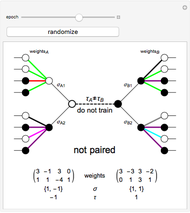

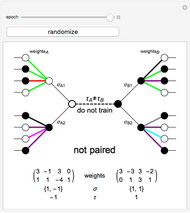

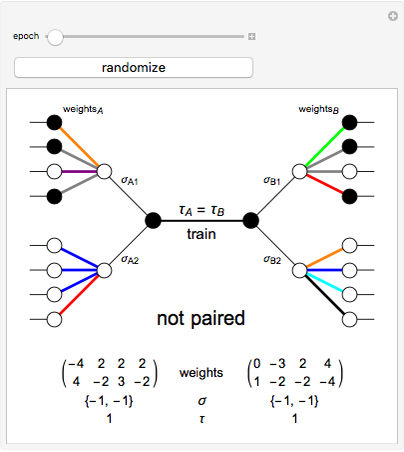

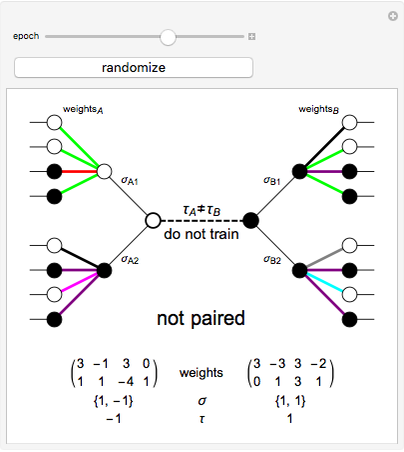

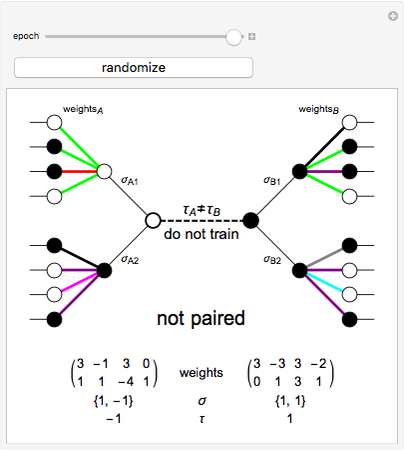

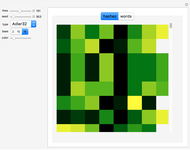

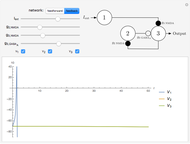

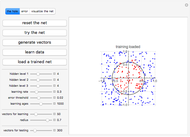

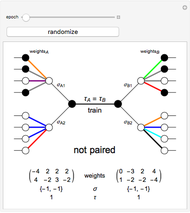

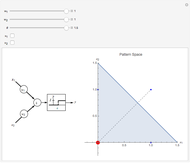

This Demonstration shows how a neural-network key-exchange protocol for encrypted communication works, using the Hebbian learning rule. The idea is as follows: person A wants to communicate with person B, but they cannot exchange a key through a secure channel. Instead, each of them sets up one of two topologically identical neural networks. They repeatedly evaluate both networks on the same inputs, adjusting the weights after each round, until the weights of the two networks match.


Contributed by: Hector Sanchez (December 2011)

Open content licensed under CC BY-NC-SA


Details

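A and B each set up a neural network of the same size but with different random weight values. The network is a tree parity machine: every hidden neuron has its own set of inputs, and a single output neuron combines the hidden outputs. Each weight $w_{ij}$ (from input $j$ to hidden neuron $i$) is an integer with the property $w_{ij} \in \{-L, -L+1, \dots, L-1, L\}$, where $L$ is the weight bound, so each weight can take $2L+1$ possible values.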
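As a minimal Python sketch of this initialization, assume integer weights drawn uniformly from $\{-L, \dots, L\}$ (the usual tree-parity-machine convention, which gives $2L+1$ values per weight) and $K$ hidden neurons with $N$ inputs each. The sizes, the seeds, and the helper `init_weights` are hypothetical choices made for illustration. This is not the Demonstration's Wolfram Language code.

```python
import random

def init_weights(k, n, l, rng):
    """Hypothetical helper: a K x N weight matrix, each entry uniform over {-L, ..., L}."""
    # randint is inclusive at both ends, so every value from -L to L can occur.
    return [[rng.randint(-l, l) for _ in range(n)] for _ in range(k)]

K, N, L = 3, 4, 3            # illustrative sizes, not taken from the Demonstration
rng_a = random.Random(1)     # A's private source of randomness (assumed seed)
rng_b = random.Random(2)     # B's private source of randomness (assumed seed)
w_a = init_weights(K, N, L, rng_a)   # A's secret starting weights
w_b = init_weights(K, N, L, rng_b)   # B's secret starting weights

values_per_weight = 2 * L + 1        # -L, ..., 0, ..., L inclusive
print(values_per_weight)             # 7
```

A and B draw from independent generators, so their starting weights almost surely differ. That difference is what the protocol then removes.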

The algorithm is:

1. Both networks receive the same random inputs $x_{ij} \in \{-1, +1\}$.

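2. Compute the value of each hidden neuron according to $\sigma_i = \operatorname{sgn}\left(\sum_{j} w_{ij} x_{ij}\right)$, where a zero sum is mapped to $-1$ so that every $\sigma_i \in \{-1, +1\}$.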

3. Compute the value of the output neuron $\tau = \prod_{i} \sigma_i$, which is $+1$ or $-1$.

4. Compare the outputs of both networks. If they do not match, return to step 1. If they match, update the weights of each network according to one of the following rules:

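The Hebbian learning rule (used here):

$$w_{ij}^{+} = g\left(w_{ij} + \sigma_i x_{ij}\,\Theta(\sigma_i \tau)\,\Theta(\tau^A \tau^B)\right)$$

The anti-Hebbian learning rule:

$$w_{ij}^{+} = g\left(w_{ij} - \sigma_i x_{ij}\,\Theta(\sigma_i \tau)\,\Theta(\tau^A \tau^B)\right)$$

Random walk:

$$w_{ij}^{+} = g\left(w_{ij} + x_{ij}\,\Theta(\sigma_i \tau)\,\Theta(\tau^A \tau^B)\right)$$

Here $\tau^A$ and $\tau^B$ are the outputs of A's and B's networks. $\Theta$ is the Heaviside step function, so only hidden neurons whose output equals $\tau$ are changed, and only when $\tau^A = \tau^B$. The function $g$ clips any weight that leaves the allowed range back to the nearest bound.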

5. Repeat the process until the weights of both neural networks are equal. The shared key is the common set of synchronized weight values.
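Steps 1 to 5 can be sketched in Python as a simulation of both parties in a single process. This is a minimal sketch under assumptions the text does not fix:

* $K$ hidden neurons with $N$ inputs each.
* The defaults $K=3$, $N=10$, $L=3$.
* The seeds.
* The convention $\operatorname{sgn}(0) = -1$.
* The helper names `forward`, `update`, and `synchronize`, and the `rule` strings. All of these are hypothetical.

It is not the Demonstration's own Wolfram Language implementation. In a real exchange, only the inputs and the outputs $\tau$ would travel over the public channel. The weights stay private.

```python
import random

def init_weights(k, n, l, rng):
    # Each weight is drawn uniformly from {-L, ..., L}.
    return [[rng.randint(-l, l) for _ in range(n)] for _ in range(k)]

def sgn(v):
    # Assumed convention: a zero sum maps to -1, so every hidden output is +/-1.
    return 1 if v > 0 else -1

def forward(w, x):
    # Step 2: sigma_i = sgn(sum_j w_ij * x_ij).  Step 3: tau = product of the sigma_i.
    sigma = [sgn(sum(wij * xij for wij, xij in zip(wi, xi))) for wi, xi in zip(w, x)]
    tau = 1
    for s in sigma:
        tau *= s
    return sigma, tau

def clip(v, l):
    # The function g: keep a weight inside [-L, L].
    return max(-l, min(l, v))

def update(w, x, sigma, tau, l, rule="hebbian"):
    # Step 4, applied in place: only hidden units with sigma_i == tau change,
    # which is the Theta(sigma_i * tau) factor in the rules.
    for i, (wi, xi) in enumerate(zip(w, x)):
        if sigma[i] != tau:
            continue
        for j, xij in enumerate(xi):
            if rule == "hebbian":
                delta = sigma[i] * xij
            elif rule == "anti_hebbian":
                delta = -sigma[i] * xij
            elif rule == "random_walk":
                delta = xij
            else:
                raise ValueError(f"unknown rule: {rule}")
            wi[j] = clip(wi[j] + delta, l)

def synchronize(k=3, n=10, l=3, rule="hebbian", seed=0, max_steps=100_000):
    """Run the exchange until both weight matrices agree; return (steps, key)."""
    public = random.Random(seed)                           # shared public inputs
    w_a = init_weights(k, n, l, random.Random(seed + 1))   # A's secret weights
    w_b = init_weights(k, n, l, random.Random(seed + 2))   # B's secret weights
    for step in range(1, max_steps + 1):
        # Step 1: both networks see the same random inputs x_ij in {-1, +1}.
        x = [[public.choice((-1, 1)) for _ in range(n)] for _ in range(k)]
        sigma_a, tau_a = forward(w_a, x)
        sigma_b, tau_b = forward(w_b, x)
        # Step 4: update only when the outputs agree (the Theta(tau_A * tau_B) factor).
        if tau_a == tau_b:
            update(w_a, x, sigma_a, tau_a, l, rule)
            update(w_b, x, sigma_b, tau_b, l, rule)
        # Step 5: once the weights are identical, they are the shared key.
        if w_a == w_b:
            return step, w_a
    raise RuntimeError("did not synchronize within max_steps")
```

For example, `steps, key = synchronize()` returns the number of input rounds used and the shared weight matrix. The exact step count depends on the seeds and sizes. Passing `rule="anti_hebbian"` or `rule="random_walk"` switches to the other two update rules.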

