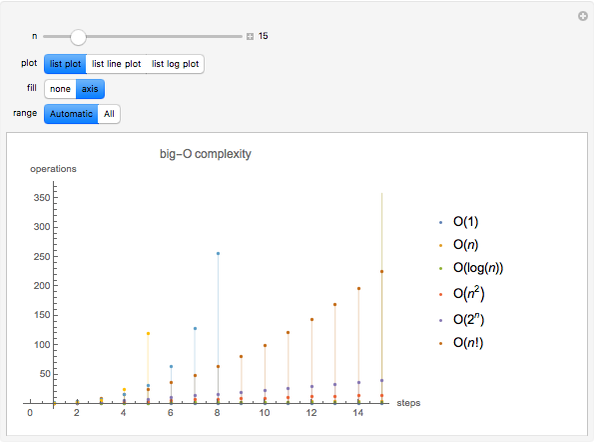

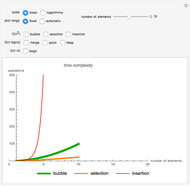

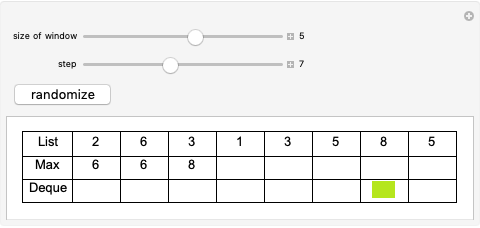

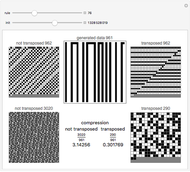

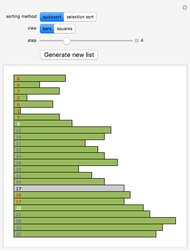

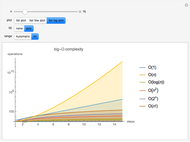

Algorithmic Complexity and Big-O Notation

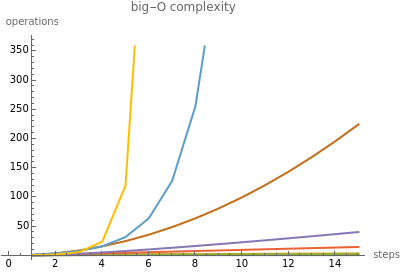

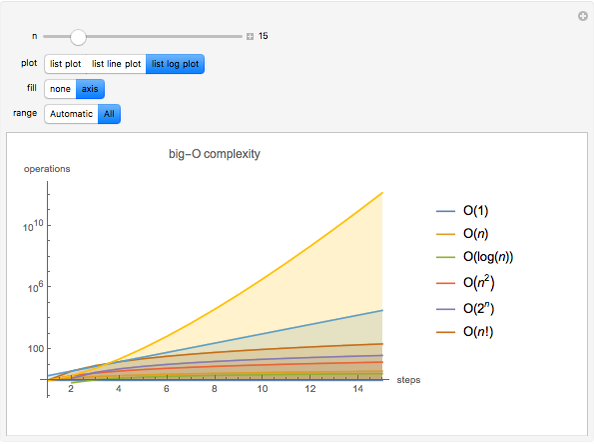

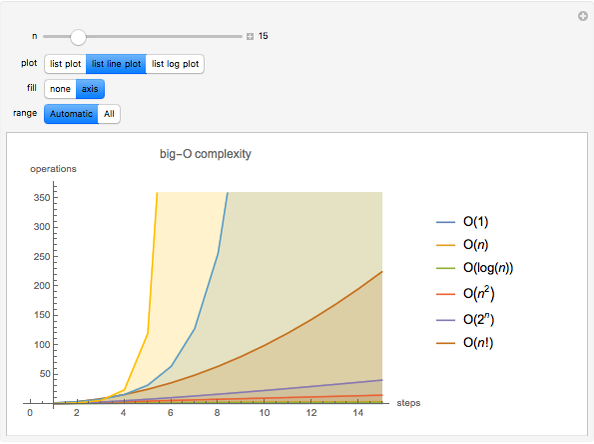

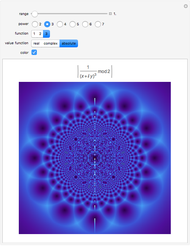

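In computer science and mathematics, big-O notation is used to describe algorithmic complexity. It gives an upper bound on how the computational cost an algorithm needs to process data and return a result (typically running time or memory) grows as the size of the input increases.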
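The following is a minimal Python sketch, not the Demonstration's own Wolfram Language code. It illustrates how step counts grow for four common complexity classes. The function names are hypothetical, and the number of loop iterations stands in for computational cost. This is a simplifying assumption, since real running time also depends on constant factors and hardware.

```python
def count_constant(n: int) -> int:
    # O(1): a fixed amount of work, independent of n
    return 1


def count_logarithmic(n: int) -> int:
    # O(log n): halve the problem size until at most one element remains
    steps = 0
    while n > 1:
        n //= 2
        steps += 1
    return steps


def count_linear(n: int) -> int:
    # O(n): one unit of work per input element
    steps = 0
    for _ in range(n):
        steps += 1
    return steps


def count_quadratic(n: int) -> int:
    # O(n^2): one unit of work per ordered pair of input elements
    steps = 0
    for _ in range(n):
        for _ in range(n):
            steps += 1
    return steps


if __name__ == "__main__":
    # Print n followed by the step count for each complexity class
    for n in (8, 64, 512):
        print(n, count_constant(n), count_logarithmic(n),
              count_linear(n), count_quadratic(n))
```

Running this prints `8 1 3 8 64`, `64 1 6 64 4096`, and `512 1 9 512 262144`. Each time n grows eightfold, the constant count stays at 1 and the logarithmic count rises by a fixed 3 steps. Over the same increase, the linear count grows eightfold and the quadratic count grows 64-fold.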

Contributed by: Daniel de Souza Carvalho (August 2015)

Open content licensed under CC BY-NC-SA

Permanent Citation

"Algorithmic Complexity and Big-O Notation"

http://demonstrations.wolfram.com/AlgorithmicComplexityAndBigONotation/

Wolfram Demonstrations Project

Published: August 31, 2015