Comparing Iterative Root-Finding Methods

Requires a Wolfram Notebook System

Interact on desktop, mobile and cloud with the free Wolfram Player or other Wolfram Language products.

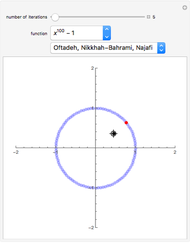

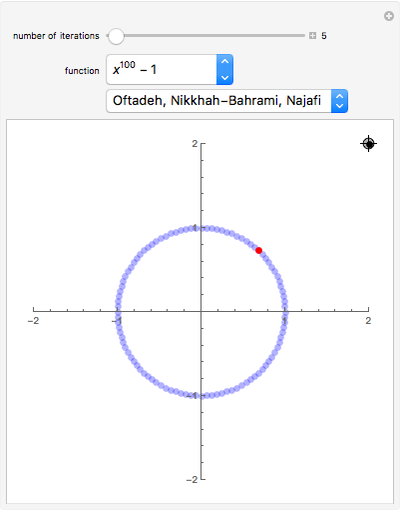

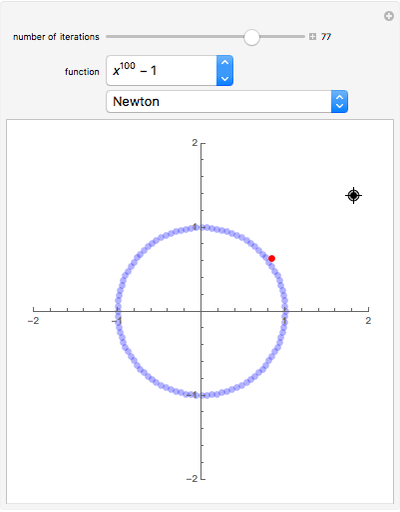

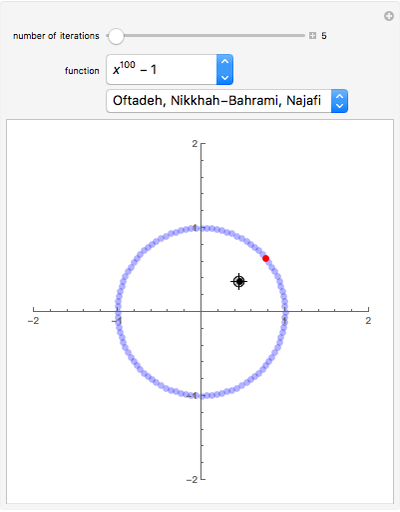

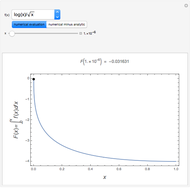

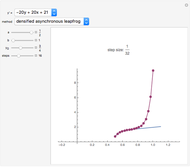

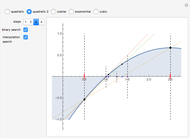

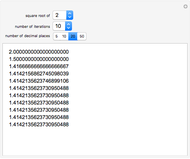

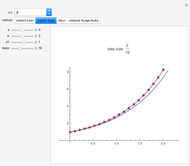

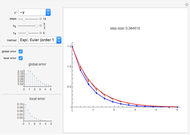

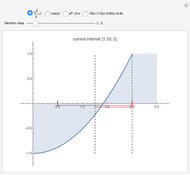

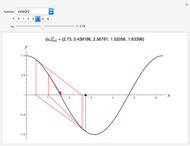

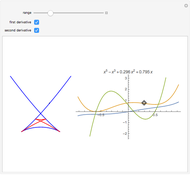

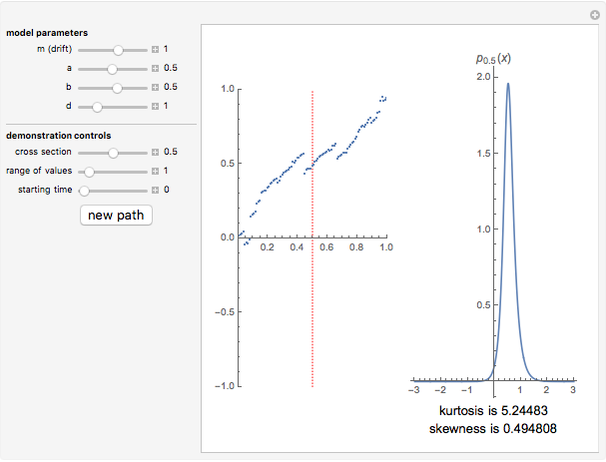

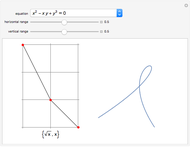

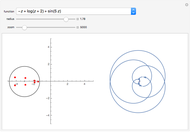

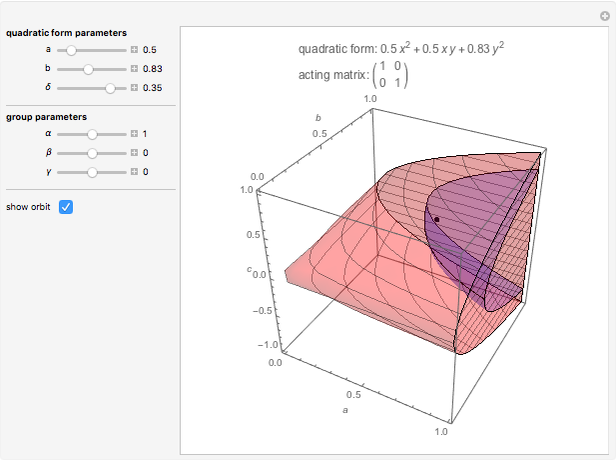

This Demonstration compares the effectiveness of a new iterative method of finding roots of nonlinear equations due to R. Oftadeh, M. Nikkhah–Bahrami, and A. Najafi and the classical Newton–Raphson method (implemented in Mathematica's FindRoot function). Choose a function  from the drop-down menu and the initial guess, which is a complex number

from the drop-down menu and the initial guess, which is a complex number  with

with  ,

,  . The blue points show all the solutions of the equation

. The blue points show all the solutions of the equation  in this square (computed using Reduce). The red point shows the root found by the currently chosen method after the specified number of iterations. By increasing the number of iterations, a better approximation can be found. From these examples it can easily be seen that the new method is considerably more effective than the Newton–Raphson method, in general requiring many fewer iterations. Moreover, one can see some other interesting properties of the new method, like its ability to find complex roots starting with real initial values or starting from very distant initial values.

in this square (computed using Reduce). The red point shows the root found by the currently chosen method after the specified number of iterations. By increasing the number of iterations, a better approximation can be found. From these examples it can easily be seen that the new method is considerably more effective than the Newton–Raphson method, in general requiring many fewer iterations. Moreover, one can see some other interesting properties of the new method, like its ability to find complex roots starting with real initial values or starting from very distant initial values.

Contributed by: Andrzej Kozlowski (March 2011)

Open content licensed under CC BY-NC-SA

Snapshots

Details

The problem of (numerically) finding roots of nonlinear equations is one of the oldest and most thoroughly researched areas of mathematics [1], yet new and surprising approaches are constantly being discovered. One such new approach was published recently in [2]. Their approach is iterative and closely related to the classical Newton–Raphson method, yet in many cases appears to be considerably more effective. The idea of this new approach is very simple. Consider the equation  , where

, where  :

:  is a sufficiently smooth (not necessarily polynomial) complex function. Let

is a sufficiently smooth (not necessarily polynomial) complex function. Let  be our initial "guess" of the value of the root. Suppose we found a smooth function

be our initial "guess" of the value of the root. Suppose we found a smooth function  , which is invertible in some neighborhood of

, which is invertible in some neighborhood of  . The Taylor expansion for

. The Taylor expansion for  in a neighborhood of

in a neighborhood of  gives

gives  (with

(with  this is just the usual Taylor expansion). We will use the function

this is just the usual Taylor expansion). We will use the function  as an approximation of

as an approximation of  , and seek

, and seek  such that

such that  , which amounts to

, which amounts to  . The resulting iterative scheme

. The resulting iterative scheme  is convergent of order 3 rather than 2 as is the case with the usual Newton–Raphson method, but it does not require the computation of second-order derivatives. The choice of a suitable

is convergent of order 3 rather than 2 as is the case with the usual Newton–Raphson method, but it does not require the computation of second-order derivatives. The choice of a suitable  is not unique; the ones considered in [1] are

is not unique; the ones considered in [1] are  ,

,  , and

, and  for suitable

for suitable  . Different choices of

. Different choices of  work better for different types of equations.

work better for different types of equations.

Here we use only the first form. The method has been extended to multivariate equations in [3]. It has many interesting properties that can be seen in the Demonstration, including the ability to find complex roots starting with real initial values.

References

[1] J. Stoer and R. Bulirsch, Introduction to Numerical Analysis, 2nd ed., New York: Springer 1993.

[2] R. Oftadeh, M. Nikkhah–Bahrami, and A. Najafi, "A Novel Cubically Convergent Iterative Method for Computing Complex Roots of Nonlinear Equations," Applied Mathematics and Computation, 217(6), 2010 pp. 2608–2618.

[3] M. Nikkhah–Bahrami and R. Oftadeh, "An Effective Iterative Method for Computing Real and Complex Roots of Systems of Nonlinear Equations," Applied Mathematics and Computation, 215(5), 2009 pp. 1813–1820.

Permanent Citation