Monte Carlo Expectation-Maximization (EM) Algorithm


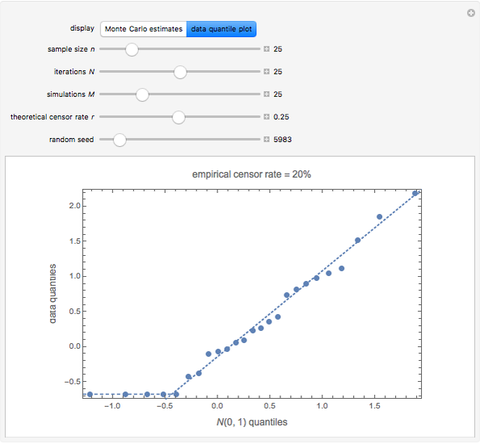

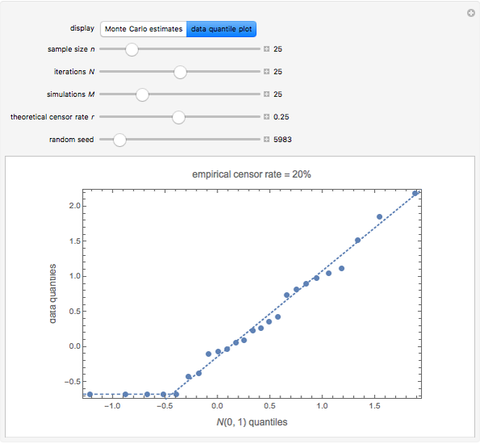

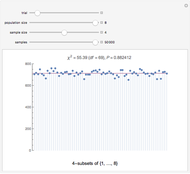

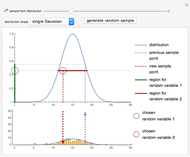

The Monte Carlo expectation-maximization (EM) algorithm is used to estimate the mean in a random sample of size $n$ from a left-censored standard normal distribution with censor point $c = \Phi^{-1}(r)$, where $r$ is the censor rate and $\Phi^{-1}$ is the inverse cumulative distribution function of the standard normal distribution. The random sample consists of $n - m$ non-censored observations and $m$ censored observations. You can see these observations in the quantile plot along with the empirical censor rate, $m/n$.
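Here is a minimal Python sketch of this data-generating setup, using only the standard library. It draws a sample of size n from the standard normal, sets the censor point to the inverse CDF of the censor rate r, and records which values fall below that point. The function name `censored_sample` and the seed handling are illustrative assumptions, not the Demonstration's Wolfram Language code.

```python
import random
from statistics import NormalDist

def censored_sample(n, r, seed=0):
    """Draw n standard normal values and left-censor them at c = Phi^{-1}(r).

    Returns the censor point c, the list of non-censored observations,
    and the number m of censored observations (values below c).
    """
    rng = random.Random(seed)
    c = NormalDist().inv_cdf(r)          # censor point for censor rate r
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    observed = [v for v in x if v >= c]  # values at or above c are recorded exactly
    m = n - len(observed)                # values below c are only known to be < c
    return c, observed, m

c, observed, m = censored_sample(n=25, r=0.2, seed=1)
print(f"c = {c:.4f}, censored = {m}, empirical censor rate = {m / 25:.2f}")
```

Values at or above the censor point are kept exactly. Each value below it contributes only to the count m, and that count is all the EM algorithm has to work with for the censored part of the sample.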

Contributed by: Ian McLeod and Nagham Muslim Mohammad (July 2013)

Department of Statistical and Actuarial Sciences, Western University

Open content licensed under CC BY-NC-SA

Snapshots

Details

Snapshot 1: This shows the quantile plot of the data used in the thumbnail. You can see from the plot that $n = 25$ and that there were five censored values, so the empirical censor rate was $5/25 = 20\%$.

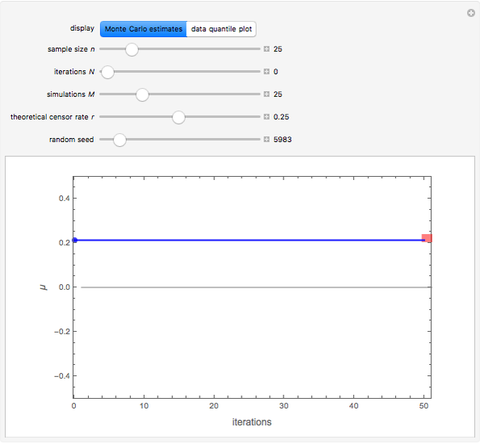

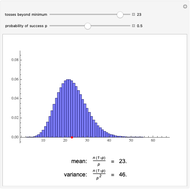

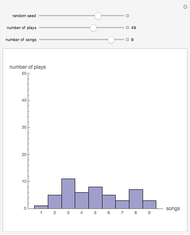

Snapshot 2: The initial point is obtained by averaging random variables generated from the normal distribution with unit variance, right-truncated at the censor point $c$, with mean parameter $\mu_0 = \bar{x}$, where $\bar{x}$ is the sample mean of the original data, including the censored values. Some crude data augmentation algorithms used in practice stop at this point. As the plot shows, this usually does not give an accurate result.
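Both the starting point described in Snapshot 2 and every Monte Carlo E-step need draws from a normal distribution with unit variance, mean mu, and right truncation at c. The sketch below uses the inverse-CDF method: draw u uniformly on (0, Φ(c − mu)) and return mu + Φ⁻¹(u). The choice of sampler and the name `rtruncnorm_right` are assumptions. The Demonstration's own code may sample differently.

```python
import random
from statistics import NormalDist

STD = NormalDist()  # standard normal, used for its cdf, pdf and inv_cdf

def rtruncnorm_right(mu, c, size, rng):
    """Draw `size` values from N(mu, 1) restricted to (-inf, c) by inverse-CDF sampling.

    Intended for moderate c - mu; if Phi(c - mu) underflows to 0, inv_cdf would fail.
    """
    upper = STD.cdf(c - mu)  # P(Z < c - mu): probability mass kept after truncation
    draws = []
    for _ in range(size):
        # rng.random() is in [0, 1), so 1 - rng.random() is in (0, 1] and u is never 0
        u = (1.0 - rng.random()) * upper
        draws.append(mu + STD.inv_cdf(u))
    return draws

# Usage: compare the simulated mean with the exact mean of the truncated distribution,
# E[X | X < c] = mu - phi(c - mu) / Phi(c - mu), here with mu = 0.
rng = random.Random(0)
c = STD.inv_cdf(0.2)
draws = rtruncnorm_right(mu=0.0, c=c, size=20000, rng=rng)
exact = 0.0 - STD.pdf(c) / STD.cdf(c)
print(sum(draws) / len(draws), exact)
```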

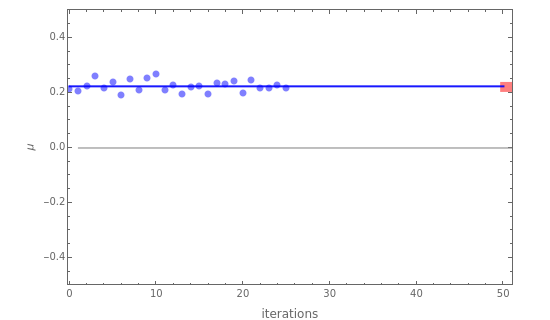

Snapshot 3: Increasing the number of iterations from 0 to 5 greatly improves the accuracy. The average of these iterates is very close to the deterministic EM result.

Snapshot 4: Increasing both the number of iterations and the number of simulations shows convergence to the deterministic EM result.

Snapshot 5: Keeping the numbers of iterations and simulations small but increasing the data sample size gives estimates that are closer to the true mean, 0. Also note the decrease in variability as the iterations increase.

The Monte Carlo EM algorithm for the censored normal distribution is discussed in [1, p. 533].
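For reference, here is a sketch of the updates in this setting. It assumes that the variance is known and equal to 1, so only the mean $\mu$ is estimated. Write $y_1,\dots,y_{n-m}$ for the non-censored observations, $m$ for the number of censored observations, $c$ for the censor point, and $\phi$, $\Phi$ for the standard normal density and CDF. Deterministic EM uses the exact mean of a normal variable truncated above at $c$. The Monte Carlo version replaces that expectation with an average of $N$ simulated draws per censored value. The symbol $N$ for the number of simulations is our own notation.

```latex
\begin{aligned}
\text{E-step (exact):}\quad & \mathbb{E}[X \mid X < c;\, \mu] = \mu - \frac{\phi(c-\mu)}{\Phi(c-\mu)}, \\
\text{EM update:}\quad & \mu^{(k+1)} = \frac{1}{n}\Bigl[\sum_{i=1}^{n-m} y_i + m\Bigl(\mu^{(k)} - \frac{\phi(c-\mu^{(k)})}{\Phi(c-\mu^{(k)})}\Bigr)\Bigr], \\
\text{MC E-step:}\quad & z_{j,s} \sim \mathcal{N}(\mu^{(k)}, 1)\ \text{truncated to } (-\infty, c), \quad j = 1,\dots,m,\; s = 1,\dots,N, \\
\text{MCEM update:}\quad & \mu^{(k+1)} = \frac{1}{n}\Bigl[\sum_{i=1}^{n-m} y_i + \sum_{j=1}^{m} \frac{1}{N}\sum_{s=1}^{N} z_{j,s}\Bigr].
\end{aligned}
```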

A new discovery illustrated in this Demonstration is that a more accurate estimate may be obtained by using the average of the Monte Carlo EM iterates, as shown by the fact that the horizontal blue line segment has nearly the same ordinate as the red one. This is what is usually done in Markov chain Monte Carlo (MCMC) applications [2], and the Monte Carlo EM algorithm may be viewed as a special case of MCMC [3].
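The comparison can be sketched in Python as follows. The sketch runs deterministic EM to convergence, then runs Monte Carlo EM with a small number of simulations per censored value, and finally averages the Monte Carlo EM iterates. It assumes unit variance is known. The starting value (the mean of the non-censored data) and the burn-in of five iterates are hypothetical choices for illustration, and the function names `em` and `mcem` are likewise our own.

```python
import random
from statistics import NormalDist, fmean

STD = NormalDist()

def truncated_mean(mu, c):
    """Exact E[X | X < c] for X ~ N(mu, 1)."""
    return mu - STD.pdf(c - mu) / STD.cdf(c - mu)

def em(observed, m, n, c, mu0, iters=200):
    """Deterministic EM for the mean of a left-censored N(mu, 1) sample."""
    s = sum(observed)
    mu = mu0
    for _ in range(iters):
        mu = (s + m * truncated_mean(mu, c)) / n
    return mu

def mcem(observed, m, n, c, mu0, iters, nsim, rng):
    """Monte Carlo EM: the E-step averages nsim truncated-normal draws per censored value."""
    s = sum(observed)
    mu = mu0
    path = [mu]
    for _ in range(iters):
        upper = STD.cdf(c - mu)  # truncation mass for the current mean
        imputed = 0.0
        for _j in range(m):
            draws = [mu + STD.inv_cdf((1.0 - rng.random()) * upper) for _s in range(nsim)]
            imputed += fmean(draws)
        mu = (s + imputed) / n  # M-step: mean of the completed data
        path.append(mu)
    return path

rng = random.Random(42)
n, r = 50, 0.3
c = STD.inv_cdf(r)
x = [rng.gauss(0.0, 1.0) for _ in range(n)]
observed = [v for v in x if v >= c]
m = n - len(observed)
mu0 = fmean(observed)  # hypothetical starting value
mu_em = em(observed, m, n, c, mu0)
path = mcem(observed, m, n, c, mu0, iters=30, nsim=5, rng=rng)
burn_in = 5  # hypothetical: discard early iterates, as is common in MCMC
mu_avg = fmean(path[burn_in:])
print(f"EM: {mu_em:.4f}  last MCEM iterate: {path[-1]:.4f}  average of iterates: {mu_avg:.4f}")
```

Each Monte Carlo EM iterate fluctuates around the EM fixed point because of simulation noise. Averaging the iterates reduces that noise, in the same way that ergodic averages are used in MCMC.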

References

[1] C. P. Robert and G. Casella, Monte Carlo Statistical Methods, 2nd ed., New York: Springer, 2004.

[2] C. J. Geyer, "Introduction to Markov Chain Monte Carlo," in Handbook of Markov Chain Monte Carlo (S. Brooks, A. Gelman, G. L. Jones, and X.-L. Meng, eds.), Boca Raton: CRC Press, 2011.

[3] D. A. van Dyk and X.-L. Meng, "The Art of Data Augmentation," Journal of Computational and Graphical Statistics, 10(1), 2001, pp. 1–50. doi:10.1198/10618600152418584.
