Expected Motion in 2x2 Symmetric Games Played by Reinforcement Learners


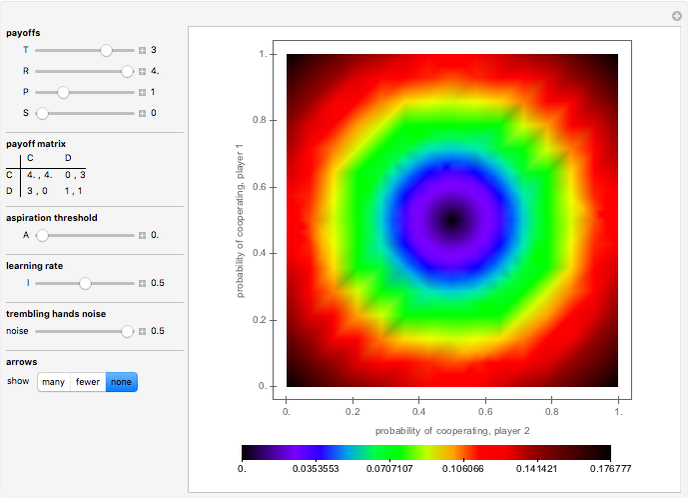

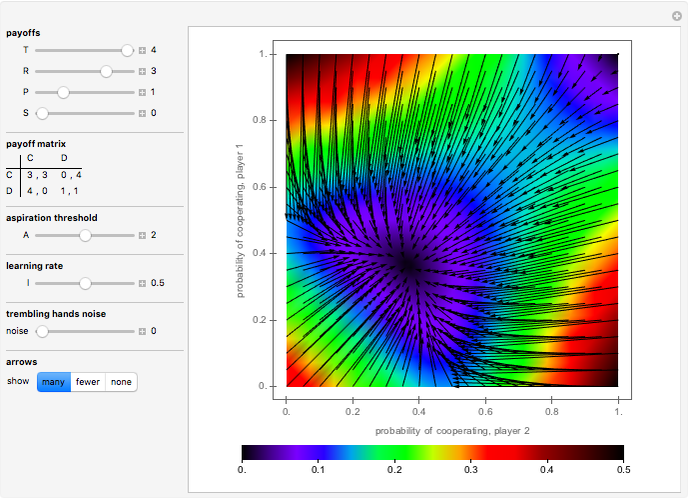

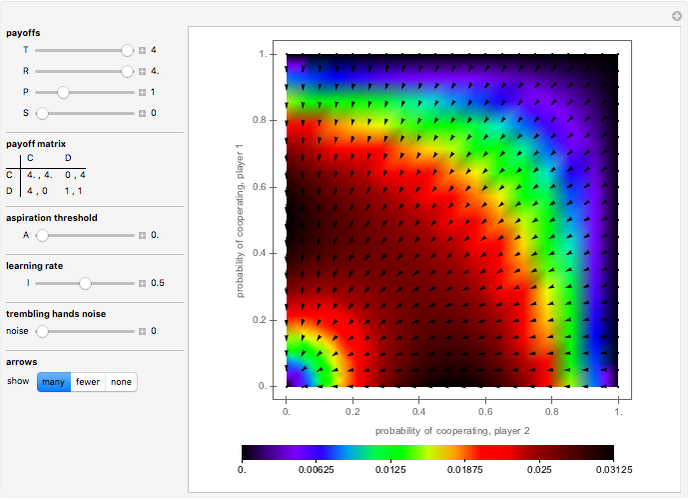

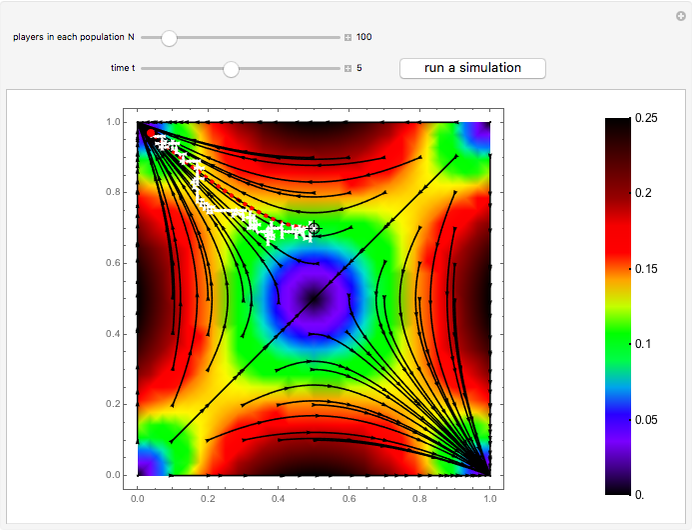

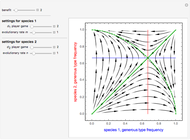

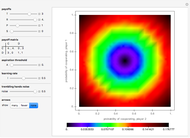

The figure shows the expected motion of a system where two players using the Bush–Mosteller reinforcement learning algorithm play a symmetric $2 \times 2$ game. $T$ (for temptation) is the payoff a defector gets when the other player cooperates; $R$ (for reward) is the payoff obtained by both players when they both cooperate; both players obtain a payoff of $P$ (for punishment) when they both defect; and finally, $S$ (for sucker) is the payoff a cooperator gets when the other player defects. Parameter $A$ denotes both players' aspiration threshold, and $l$ is their learning rate. Noise is the probability that a player takes the opposite action to the one she or he intended. The arrows represent the expected motion at various states of the system. The background is colored using the norm of the expected motion.
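As a rough illustration of how the payoffs, the aspiration threshold, and the learning rate interact, here is a minimal Python sketch of a single Bush–Mosteller update for one player. It assumes a common formulation of the algorithm: the stimulus is the payoff minus the aspiration, normalized by the largest possible distance between any payoff and the aspiration, and the probability of the action just taken moves toward 1 after a positive stimulus and toward 0 after a negative one. The function names and the payoff values in the usage lines are hypothetical and are not taken from the Demonstration.

```python
def stimulus(payoff, aspiration, all_payoffs):
    """Normalized stimulus in [-1, 1] (assumed formulation).

    Positive when the payoff exceeds the aspiration threshold,
    negative when it falls short.
    """
    scale = max(abs(u - aspiration) for u in all_payoffs)
    if scale == 0:
        return 0.0  # every payoff equals the aspiration: no reinforcement
    return (payoff - aspiration) / scale


def update_probability(p_action, s, learning_rate):
    """Return the new probability of repeating the action just taken."""
    if s >= 0:
        # Satisfactory outcome: move toward 1.
        return p_action + learning_rate * s * (1.0 - p_action)
    # Unsatisfactory outcome: move toward 0.
    return p_action + learning_rate * s * p_action


# Illustrative values only (hypothetical, not one of the snapshots).
T, R, P, S = 4, 3, 1, 0
A, l = 2, 0.5

s_reward = stimulus(R, A, (T, R, P, S))        # mutual cooperation
s_sucker = stimulus(S, A, (T, R, P, S))        # cooperated against a defector
p_after_reward = update_probability(0.5, s_reward, l)
p_after_sucker = update_probability(0.5, s_sucker, l)
```

With these illustrative numbers, a cooperator who receives $R$ becomes more likely to cooperate again, while one who receives $S$ becomes less likely to do so.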

Contributed by: Luis R. Izquierdo and Segismundo S. Izquierdo (April 2008)

Open content licensed under CC BY-NC-SA

Snapshots

Details

Reinforcement learners tend to repeat actions that led to satisfactory outcomes in the past, and avoid choices that resulted in unsatisfactory experiences. This behavior is one of the most widespread adaptation mechanisms in nature. This Demonstration shows the expected motion of a system where two players using the Bush–Mosteller reinforcement learning algorithm play a symmetric $2 \times 2$ game. Mathematical analyses conducted by the contributors of this Demonstration show that the expected motion displayed in the figure is especially useful for characterizing the transient dynamics of the system, particularly with small learning rates. However, this expected motion can be misleading when studying the asymptotic behavior of the model. Further information at http://luis.izqui.org and http://segis.izqui.org.
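The expected motion at a given state can be sketched in Python as the probability-weighted average of the change in each player's cooperation probability over the four possible outcomes. This is a minimal sketch under stated assumptions, not the Demonstration's source code: the state is taken to be the pair of probabilities with which each player intends to cooperate, the stimulus is normalized as in a common formulation of Bush–Mosteller, and under noise the action actually performed (not the intended one) is the one reinforced. The function name `expected_motion` and the payoff values in the usage line are hypothetical.

```python
def expected_motion(p1, p2, T, R, P, S, A, l, noise=0.0):
    """Expected change in (p1, p2), the players' cooperation probabilities."""
    scale = max(abs(u - A) for u in (T, R, P, S))

    def stim(u):
        # Normalized stimulus in [-1, 1]; zero if all payoffs equal A.
        return 0.0 if scale == 0 else (u - A) / scale

    def delta_coop(p, cooperated, s):
        # Probability of the action actually performed (assumption:
        # the performed action is the one that gets reinforced).
        p_act = p if cooperated else 1.0 - p
        d = l * s * (1.0 - p_act) if s >= 0 else l * s * p_act
        # A change in P(defect) is the opposite change in P(cooperate).
        return d if cooperated else -d

    # Probability of actually cooperating once noise flips intentions.
    q1 = p1 * (1.0 - noise) + (1.0 - p1) * noise
    q2 = p2 * (1.0 - noise) + (1.0 - p2) * noise

    # (player 1 payoff, player 2 payoff) for each pair of actions.
    payoffs = {
        (True, True): (R, R),
        (True, False): (S, T),
        (False, True): (T, S),
        (False, False): (P, P),
    }

    em1 = em2 = 0.0
    for (c1, c2), (u1, u2) in payoffs.items():
        prob = (q1 if c1 else 1.0 - q1) * (q2 if c2 else 1.0 - q2)
        em1 += prob * delta_coop(p1, c1, stim(u1))
        em2 += prob * delta_coop(p2, c2, stim(u2))
    return em1, em2


# Illustrative values only (hypothetical, not one of the snapshots).
em = expected_motion(0.5, 0.5, T=4, R=3, P=1, S=0, A=2, l=0.5)
```

Evaluating this function on a grid of states in the unit square would give arrows comparable to those in the figure, and the Euclidean norm of each returned pair corresponds to the quantity used to color the background.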

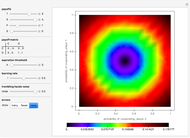

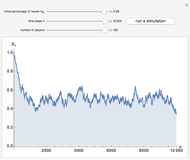

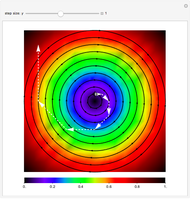

Snapshot 1: stag hunt game played by two players with aspiration threshold equal to 0 and learning rate equal to 0.5; the noise is equal to 0.5, which means that players are making their decisions at random

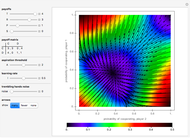

Snapshot 2: prisoner's dilemma game played by two players with aspiration threshold equal to 2 and learning rate equal to 0.5

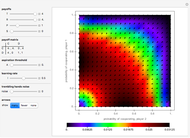

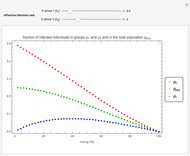

Snapshot 3: a game played by two players with aspiration threshold equal to 0 and learning rate equal to 0.5

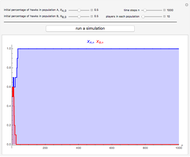

Snapshot 4: a coordination game played by two players with aspiration threshold equal to 0 and learning rate equal to 0.5

Permanent Citation