Richardson Extrapolation Applied Twice to Accelerate the Convergence of an Estimate

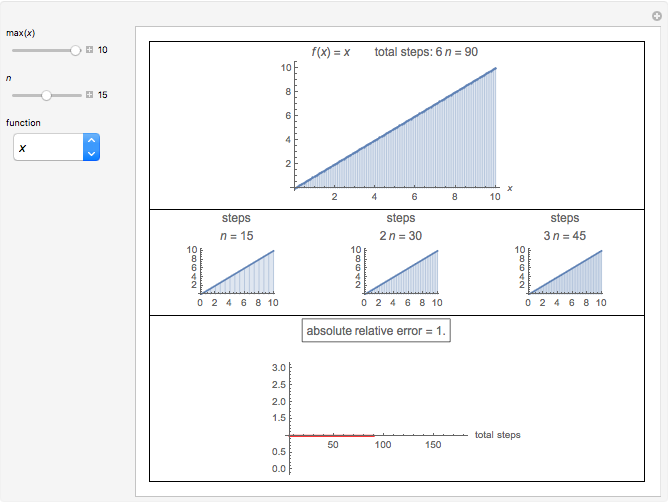

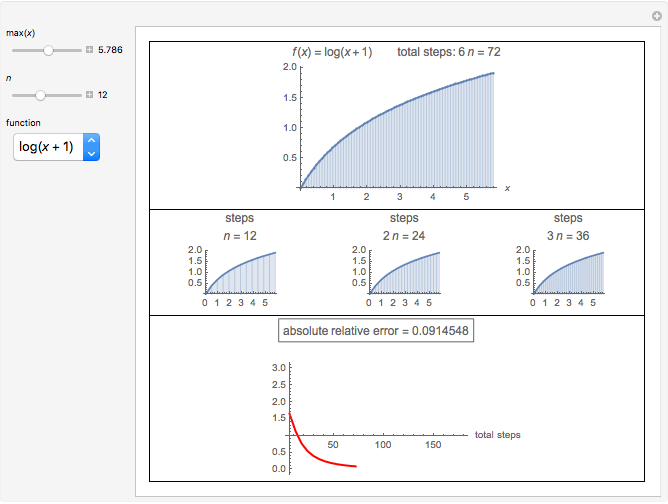

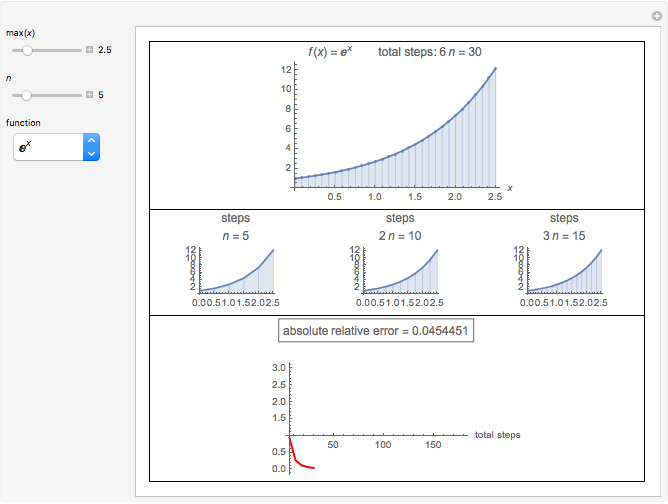

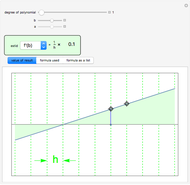

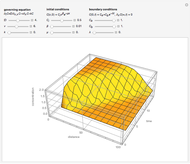

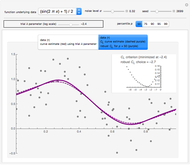

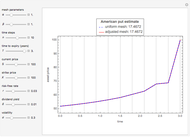

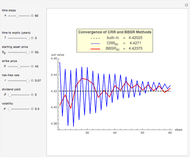

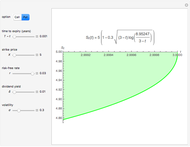

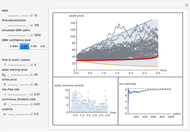

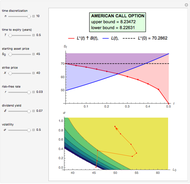

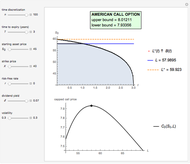

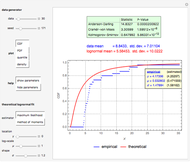

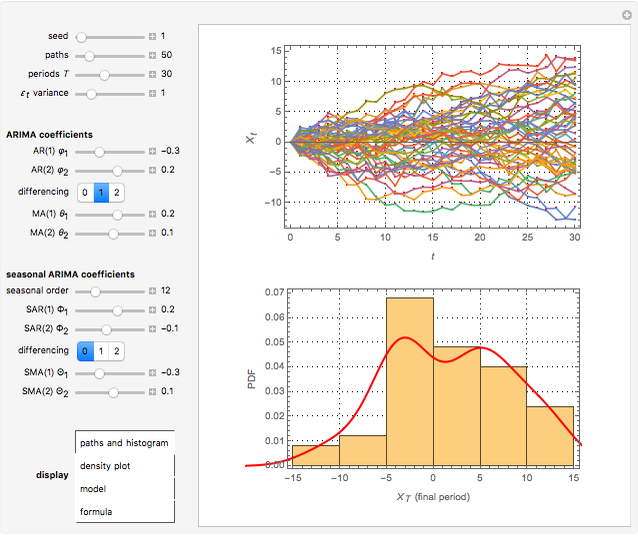

This Demonstration shows Richardson extrapolation applied twice to accelerate the convergence of an estimate.

Contributed by: Michail Bozoudis (July 2014)

Suggested by: Michail Boutsikas

Open content licensed under CC BY-NC-SA

Details

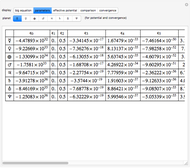

Let an approximation of the exact value of the integral of a function depend on a positive step size, with an error formula of the form

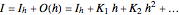

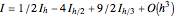

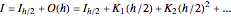

where the exponents of the step size are known constants. For two further step sizes,

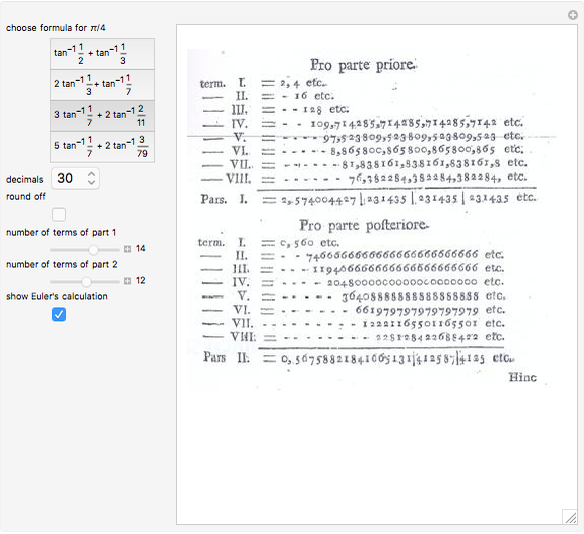

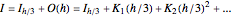

To apply Richardson extrapolation twice, multiply the last two equations by suitable constants and then add all the equations, so that the two error terms of the lowest order disappear:

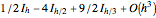
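As a worked sketch of this elimination, the notation below is assumed for illustration and is not necessarily the author's: $A(h)$ is the approximation, $A$ the exact value, $k_1 < k_2 < k_3$ the known exponents, $a_i$ the coefficients, and the step size is taken to be halved twice.

$$
\begin{aligned}
A &= A(h) + a_1 h^{k_1} + a_2 h^{k_2} + O(h^{k_3}),\\
A &= A(h/2) + a_1 (h/2)^{k_1} + a_2 (h/2)^{k_2} + O(h^{k_3}),\\
A &= A(h/4) + a_1 (h/4)^{k_1} + a_2 (h/4)^{k_2} + O(h^{k_3}).
\end{aligned}
$$

Under these assumptions, multiplying the second equation by $c_2 = -(2^{k_1} + 2^{k_2})$ and the third by $c_3 = 2^{k_1 + k_2}$ and adding all three cancels both the $h^{k_1}$ and the $h^{k_2}$ terms, since $1 + c_2 2^{-k} + c_3 4^{-k} = 0$ for $k = k_1$ and $k = k_2$:

$$
A = \frac{A(h) - (2^{k_1} + 2^{k_2})\,A(h/2) + 2^{k_1 + k_2}\,A(h/4)}{(2^{k_1} - 1)(2^{k_2} - 1)} + O(h^{k_3}).
$$

For example, with $k_1 = 2$ and $k_2 = 4$ (the leading exponents of the composite trapezoidal rule for a smooth integrand), this reduces to $A \approx \bigl(A(h) - 20\,A(h/2) + 64\,A(h/4)\bigr)/45$.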

Notice that the unextrapolated approximation at the smallest step size and the twice-extrapolated approximation require the same computational effort, yet their errors are of the lowest order and of the third-lowest order in the step size, respectively.
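The comparison can be tried numerically with a minimal Python sketch. It assumes the composite trapezoidal rule as the underlying approximation (error exponents 2, 4, 6 for a smooth integrand) and a step size that is halved twice. The function names `trapezoid` and `richardson_twice` and the test integral of `sin` over `[0, pi]` are illustrative choices, not taken from the Demonstration.

```python
import math

def trapezoid(f, a, b, n):
    """Composite trapezoidal rule with n equal subintervals."""
    h = (b - a) / n
    interior = sum(f(a + i * h) for i in range(1, n))
    return h * (0.5 * (f(a) + f(b)) + interior)

def richardson_twice(f, a, b, n, k1=2, k2=4):
    """Combine estimates at step sizes h, h/2 and h/4 (h = (b - a) / n)
    so that the h**k1 and h**k2 error terms cancel.
    The defaults k1=2, k2=4 assume a trapezoidal-type error expansion."""
    c2 = -(2**k1 + 2**k2)   # multiplier for the h/2 estimate
    c3 = 2**(k1 + k2)       # multiplier for the h/4 estimate
    t1 = trapezoid(f, a, b, n)
    t2 = trapezoid(f, a, b, 2 * n)
    t4 = trapezoid(f, a, b, 4 * n)
    return (t1 + c2 * t2 + c3 * t4) / (1 + c2 + c3)

exact = 2.0  # integral of sin(x) over [0, pi]

# Plain trapezoidal estimate on the finest grid (16 subintervals).
plain = trapezoid(math.sin, 0.0, math.pi, 16)

# Twice-extrapolated estimate built from 4, 8 and 16 subintervals.
accelerated = richardson_twice(math.sin, 0.0, math.pi, 4)

print("plain error:      ", abs(exact - plain))
print("accelerated error:", abs(exact - accelerated))
```

The three grids are nested, so an implementation that caches function values would need no evaluations beyond those of the finest grid. This sketch recomputes them for simplicity.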
